Transcript - The Road to PS5 - Mark Cerny's deep dive into the PlayStation 5

Thank you Jim.

There will be lots of chances later on this year to look at the PlayStation 5 games.

Today I want to talk a bit about our goals for the PlayStation 5 hardware and how they influenced the development of the console.

I think you all know I'm a big believer in console generations. Once every 5 or 6 or 7 years a console arrives with substantially new capabilities. There's a lot of learning by the game developers, hopefully not too overwhelming, and soon there are games that could never have been created before.

Now it used to be that as a console designer you'd somehow intuit what would be the best set of capabilities for the new console and then build it in complete secrecy.

For the PlayStation consoles that period lasted through PlayStation 3, a powerful and groundbreaking console, but also one that caused quite a lot of heartache as it was initially difficult to develop games for.

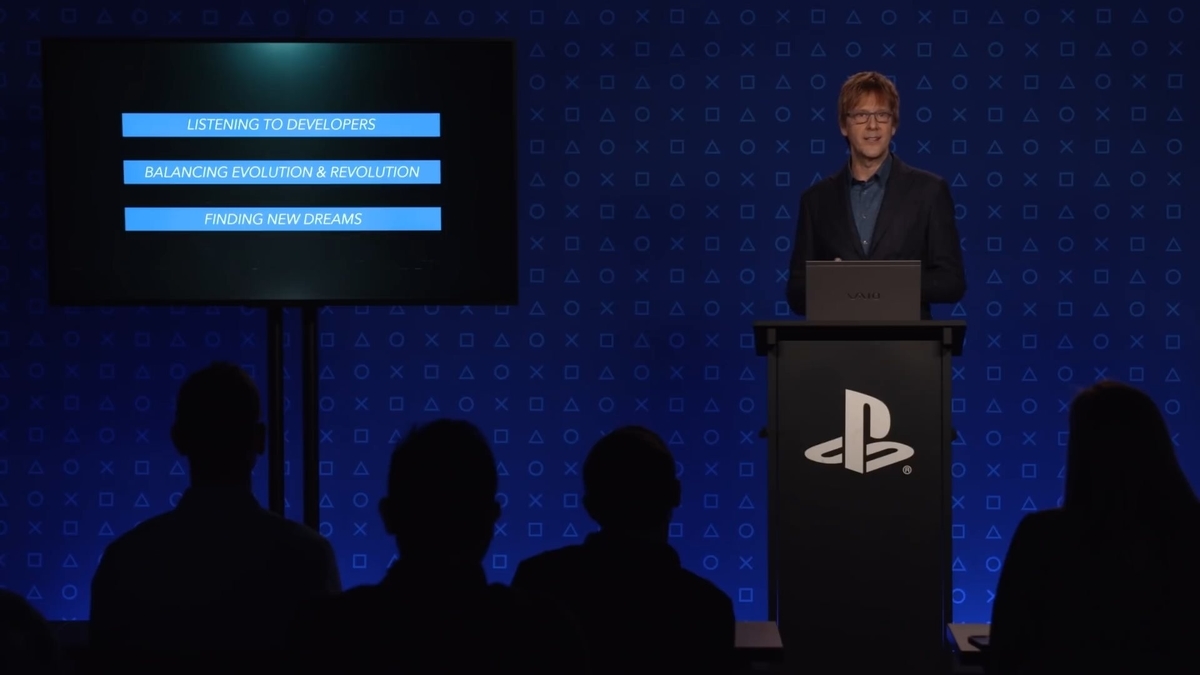

So starting with PlayStation 4 we've taken a different approach roughly centered around three principles.

The first of these is listening to the developers which is to say that a lot of what we put into a console derives directly from the needs and aspirations of the game creators.

We definitely do have some ideas of our own but at the core of our philosophy for designing consoles is that game players are here for the fantastic games.

Which is to say that game creators matter. Anything we can do to make life easier for the game creators, or help them realize their dreams, we will do.

So about once every two years I take a tour of the industry.

I go to the various developers and publishers, sit down and discuss how they're doing with the current consoles and what they'd like to see in future consoles.

This requires weeks on the road, as reaching the bulk of the game creators involves talking to well over a hundred people; it's something like two dozen publishers and developers.

And it is incredibly valuable.

By the way the feature most requested by the developers that was an SSD.

Which we were very happy to put in hardware but a lot of problem solving was required.

I'll be doing a deep dive on the SSD and surrounding systems later on in this talk.

It's also key to make a generational leap while keeping the console sufficiently familiar to game developers.

I think about this in terms of balancing evolution and revolution.

Now with PlayStation 1, 2 and 3 the target was a revolution each time, with a brand new feature set.

That was great in many ways but time for the developers to get up and running got longer with each console.

In the past I've called this time to triangle.

Here's what I had for those three consoles.

To be clear, I'm not talking about time to make a game. Developers will be ambitious and it may take them six years or so to realize their vision. What I'm talking about is that dead time before graphics and other aspects of game development are up and running, and trying to minimize that.

On the other hand, if we're trying to reduce that dead time to zero, that means the hardware architecture can't change at all; we're handcuffed. We need to judge for each feature what value it adds and whether it's worth the increase in developer time needed to support it.

So with PlayStation 4 we were able to strike a pretty good balance between performance and familiarity; we got required learning back to PlayStation 1 levels.

With PS5 the GPU was definitely the area where we felt the most tension between adding new features and keeping a familiar programming model.

Ultimately I think we've ended up with something under a month of getting up to speed; that feels like we're striking about the right balance. I'll go into a bit more detail later today about our philosophy with the GPU and the specific feature set that resulted from it.

It's also very important for us as the hardware team to find new dreams, by which I mean something other than CPU performance, GPU performance and the amount of RAM.

The increase in graphics performance over the past two decades has been astonishing but there are other areas in which we can innovate and provide a significant value to the game creators and through them the players.

That's why the SSD was very much on our list of directions to explore regardless of what came out of the conversations with game developers and publishers.

The biggest feature in this category is the custom engine for audio; that's today's final topic.

The push for vastly improved audio, and in particular 3D audio, isn't something that came out of the developer meetings. It's much more the case that we had a dream of what might be possible five years from now and then worked out a number of steps we could take to set us on that path.

So here again are the three principles the first being enabling the desires of developers to drive the hardware design.

To me the SSD really is the key to the next generation.

It's a game changer.

And it was the number one ask from developers for PlayStation 5, as in "we know it's probably impossible, but can you put an SSD in it?"

That was a discussion we were also having internally. It was clear that the presence of a hard drive in every PlayStation 4 was having a positive impact; a lot of things that would simply have been impossible at Blu-ray disc speeds were now possible.

At the same time though, in 2015 and 2016 when we were having these conversations, developers were already banging up against the limits of the hard drive and a lot of developer time was being spent designing around slow load speeds.

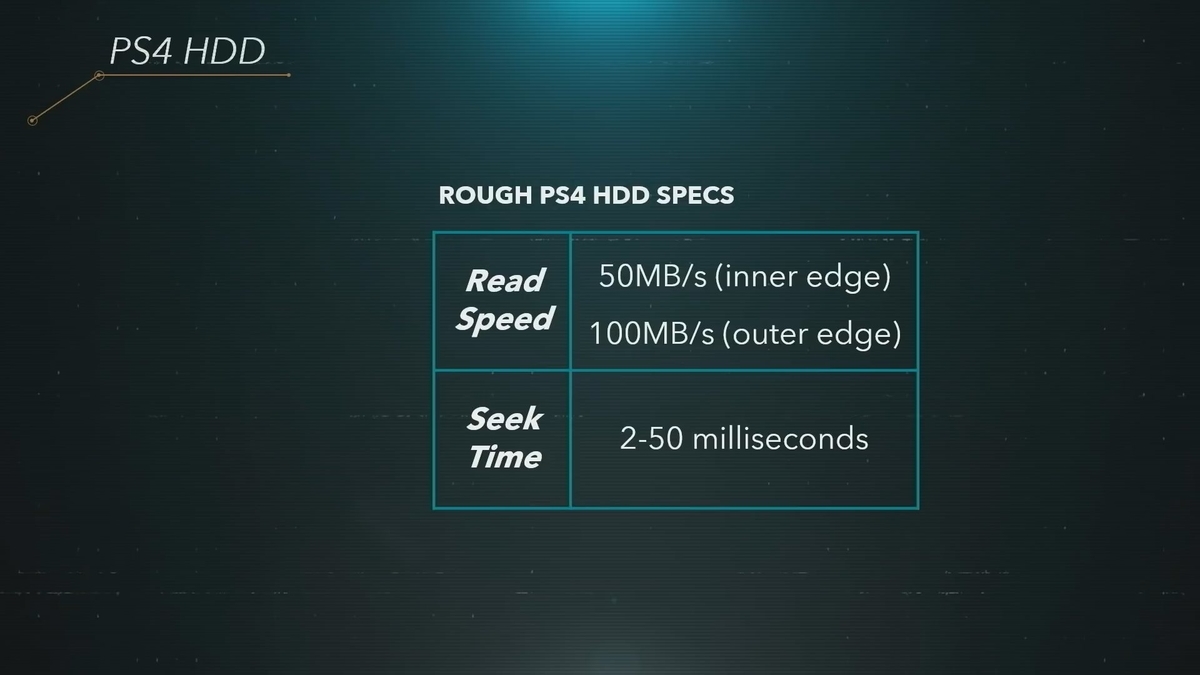

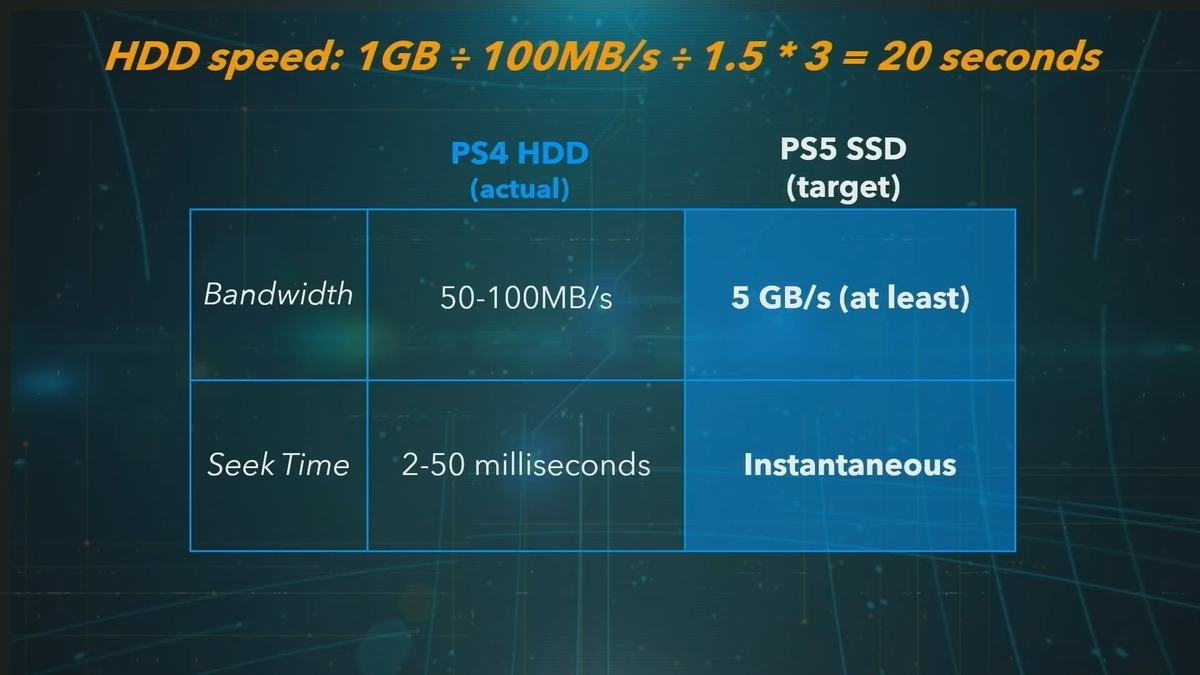

I want to focus in on just one number here which is how long it takes to load a gigabyte of data from a hard drive.

The difficulty being that hard drives are neither particularly fast nor flexible.

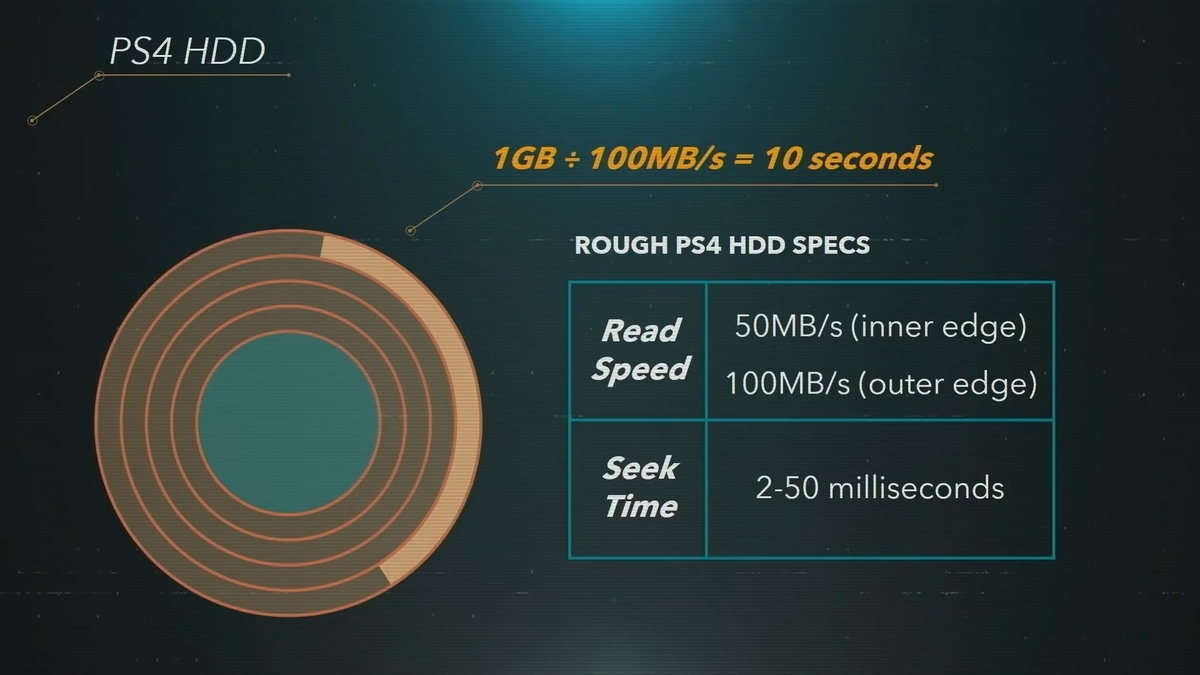

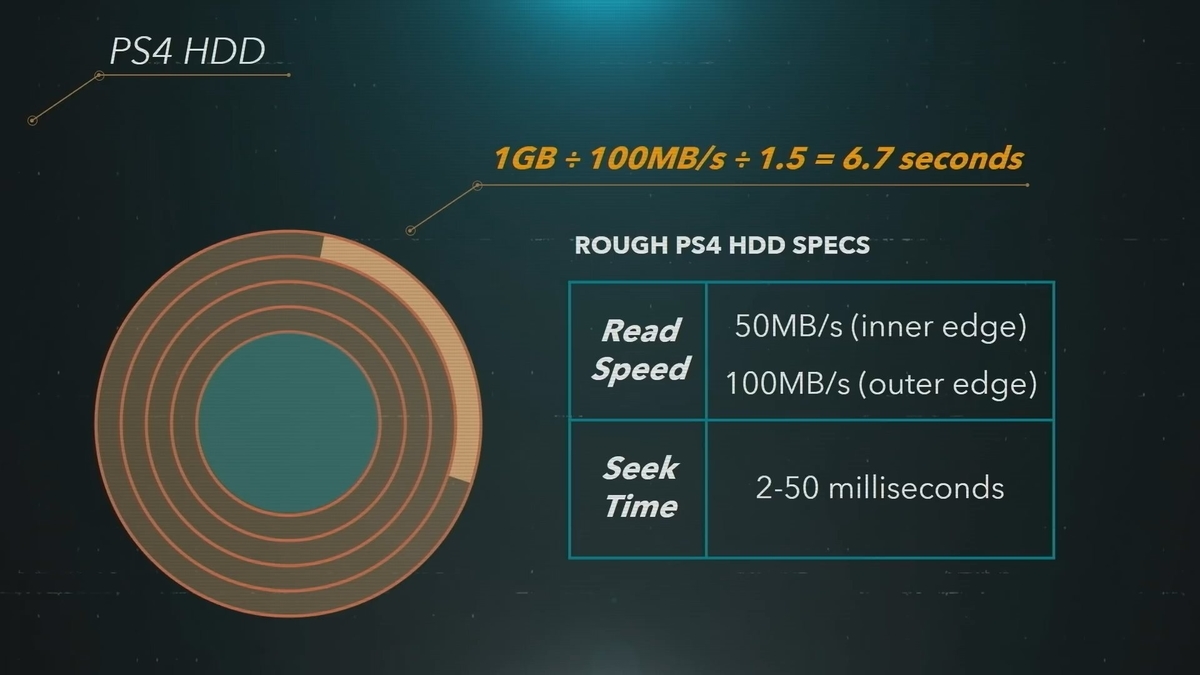

If all your data is in one block which is frankly not very likely you can load 50 to 100 megabytes a second depending on where the data is located on the hard drive.

Let's assume it's on the outer edge which means loading a gigabyte takes 10 seconds.

If you compress your game packages you can fit more data on the Blu-ray disc and also effectively boost your hard drive read speed by the compression ratio.

We support Zlib decompression on PlayStation 4; that gets you something like 50% more data on the disc and 50% higher effective read speed.

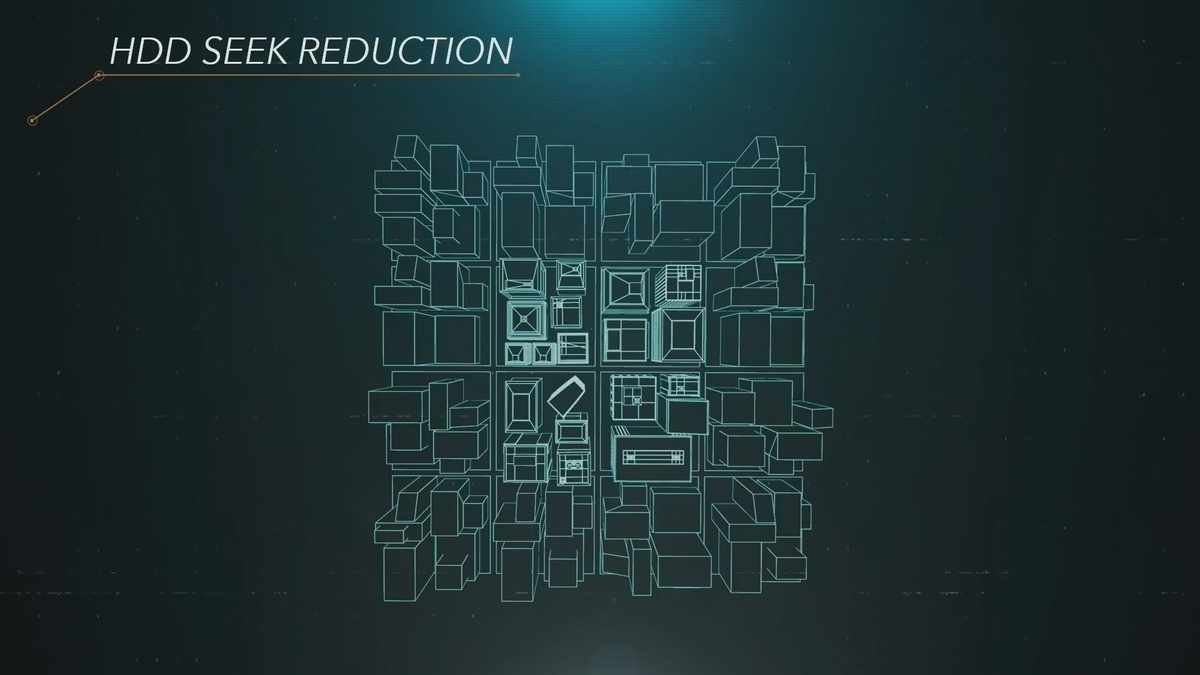

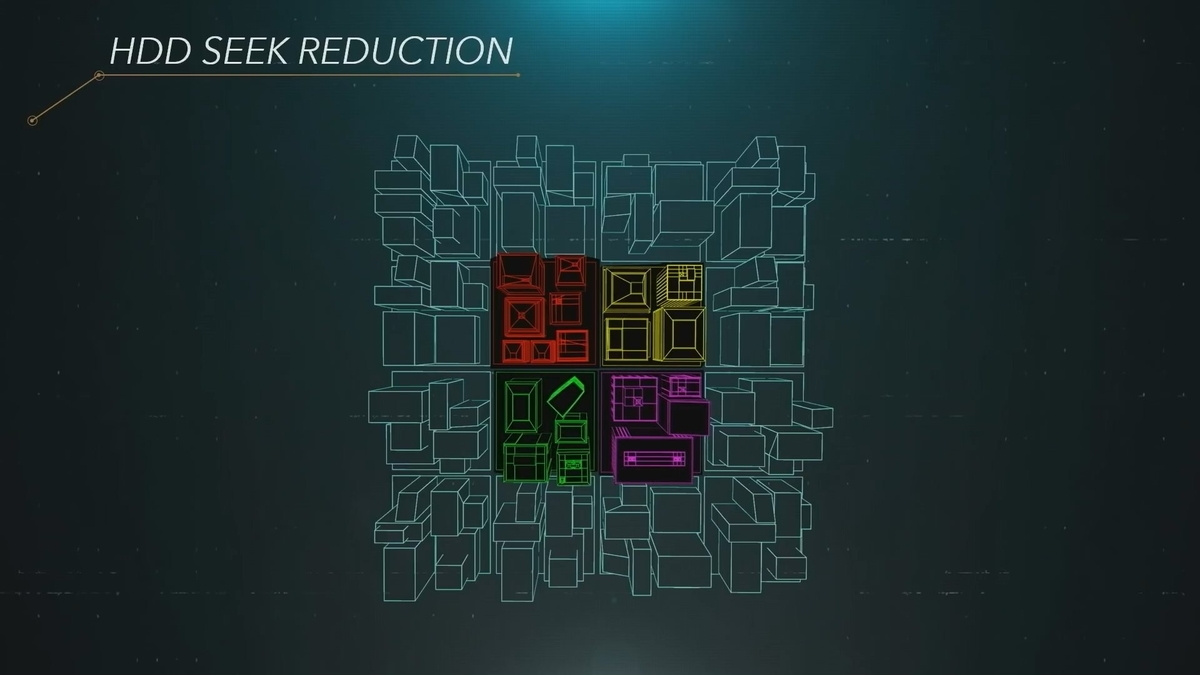

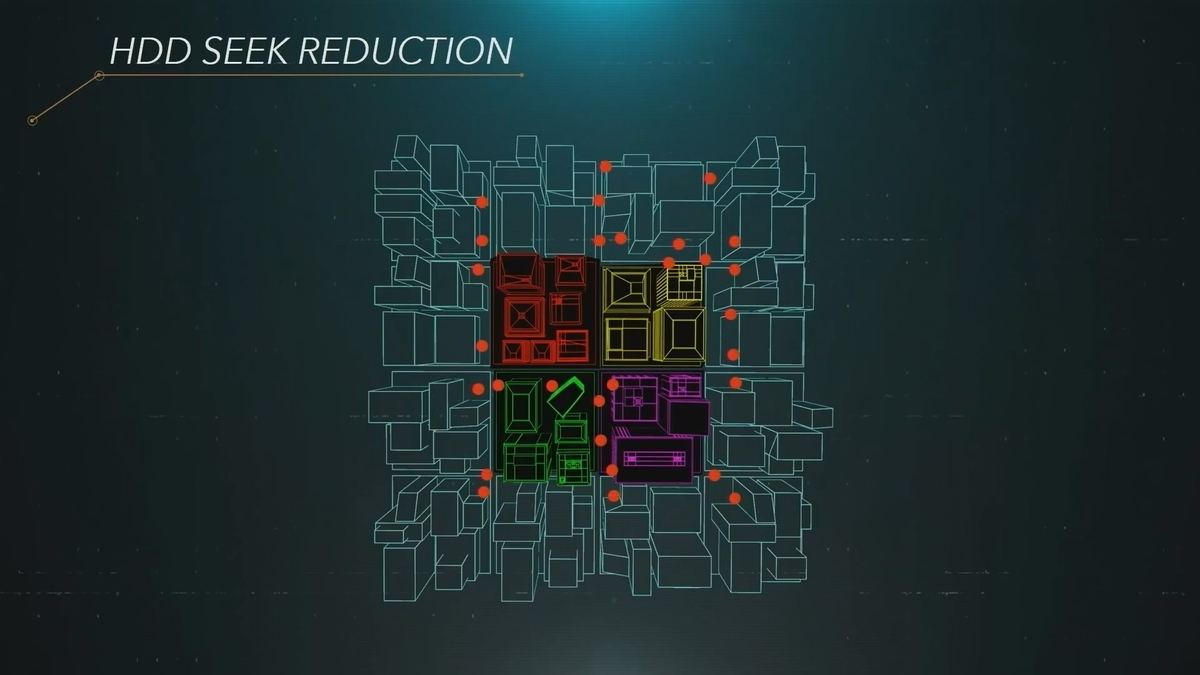

Unfortunately though it's highly likely that your data is scattered around in files on the hard drive as well as sourced from multiple locations within those files.

So lots of seeks are needed, at 2 to 50 milliseconds each.

My rule of thumb is that the hard drive is spending two-thirds of its time seeking and only a third of its time actually loading data.

Putting all of that together a gigabyte is very roughly 20 seconds to load from a hard drive.
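The arithmetic behind that 20 second figure can be reproduced as a rough sketch. The function name and the choice of 1 gigabyte = 1000 megabytes are assumptions made for illustration; the 100 megabytes a second, the 50% compression gain and the "one third of the time actually loading" rule of thumb come from the talk.

```python
# Back-of-the-envelope model of the hard drive numbers quoted in the talk.
GB_IN_MB = 1000  # assumption: treat a gigabyte as 1000 megabytes


def hdd_load_seconds(size_mb, read_mb_per_s, compression_gain, transfer_fraction):
    """Estimate wall-clock seconds needed to load `size_mb` of game data.

    read_mb_per_s     -- sequential read speed of the drive
    compression_gain  -- effective speed-up from compression (1.5 = 50% faster)
    transfer_fraction -- share of time spent reading rather than seeking
    """
    effective_rate = read_mb_per_s * compression_gain
    transfer_seconds = size_mb / effective_rate
    return transfer_seconds / transfer_fraction


sequential = hdd_load_seconds(GB_IN_MB, 100, 1.0, 1.0)      # one contiguous block
compressed = hdd_load_seconds(GB_IN_MB, 100, 1.5, 1.0)      # plus Zlib compression
with_seeks = hdd_load_seconds(GB_IN_MB, 100, 1.5, 1.0 / 3)  # plus time lost to seeks

print(f"{sequential:.1f} s, {compressed:.1f} s, {with_seeks:.1f} s")
```

Compression alone brings the best case down to under 7 seconds, and the seek overhead then triples it to roughly 20.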

Now a gigabyte is not much data; games are using 5 or 6 gigabytes of RAM on PlayStation 4, so boot times and load times can get pretty grim. Or to put that differently: as a player you wait for the game to boot, wait for the game to load, wait for the level to reload every time you die, and you wait for what is euphemistically called fast travel.

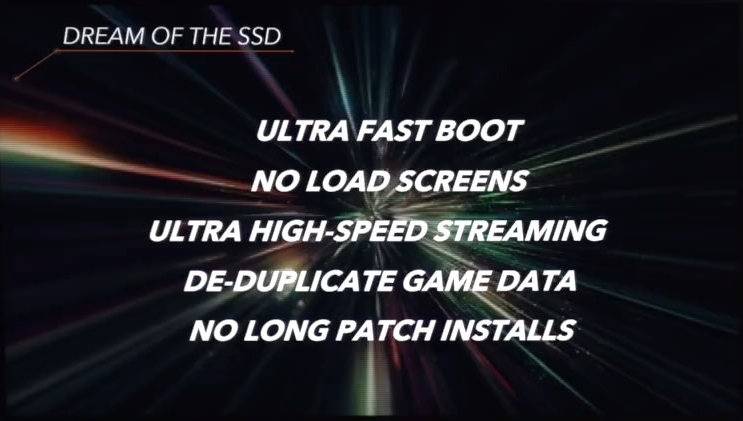

And all of that leads to the dream: what if we could have not just an SSD but a blindingly fast SSD? If we could load 5 gigabytes a second from it, what would change?

Now SSDs are completely different from hard drives.

They don't have seeks as such.

If you have a 5 gigabytes a second SSD you can read data from a thousand different locations in that second pretty much at speed.

As for time to load a gigabyte, this is next-gen we're talking about, so memory is bigger; instead we should be asking how long to load 2 gigabytes.

And the answer is about a quarter of a second. That's amazing; we're talking two orders of magnitude, meaning very roughly 100 times faster.
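As a quick sanity check on those figures, here is a small sketch. Reading the quarter-second answer as an effective (post-decompression) rate rather than the raw 5 gigabytes a second is an assumption on my part; the 20 seconds per gigabyte for the hard drive is the figure given earlier in the talk.

```python
def load_seconds(size_gb, rate_gb_per_s):
    """Seconds needed to move size_gb at a steady rate."""
    return size_gb / rate_gb_per_s


raw_seconds = load_seconds(2, 5)   # 2 GB at the raw 5 GB/s target
implied_rate = 2 / 0.25            # rate needed to load 2 GB in a quarter second
hdd_seconds = 2 * 20               # hard drive: roughly 20 s per gigabyte
speedup = hdd_seconds / 0.25       # ratio of the two load times

print(raw_seconds, implied_rate, hdd_seconds, speedup)
```

At the raw rate the load takes 0.4 seconds; a quarter of a second corresponds to about 8 gigabytes a second of effective throughput, and either way the gap to the hard drive is on the order of a hundred times.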

Which means at 5 gigabytes a second for the SSD the potential is that the game boots in a second. There are no load screens; the game just fades down while loading a half dozen gigabytes and fades back up again.

Same for a reload: you're immediately back in the action after you die.

And fast travel becomes so fast it's blink and you miss it. As game creators we go from trying to distract the player from how long fast travel is taking, like those "Spider-Man" subway rides, to being so blindingly fast that we might even have to slow that transition down.

Pretty cool, right? But for me this is not the primary reason to change from a hard drive to an SSD.

The primary reason for an ultra-fast SSD is that it gives the game designer freedom.

Or to put that differently with a hard drive the 20 seconds that it takes to load a gigabyte can sabotage the game that the developer is trying to create.

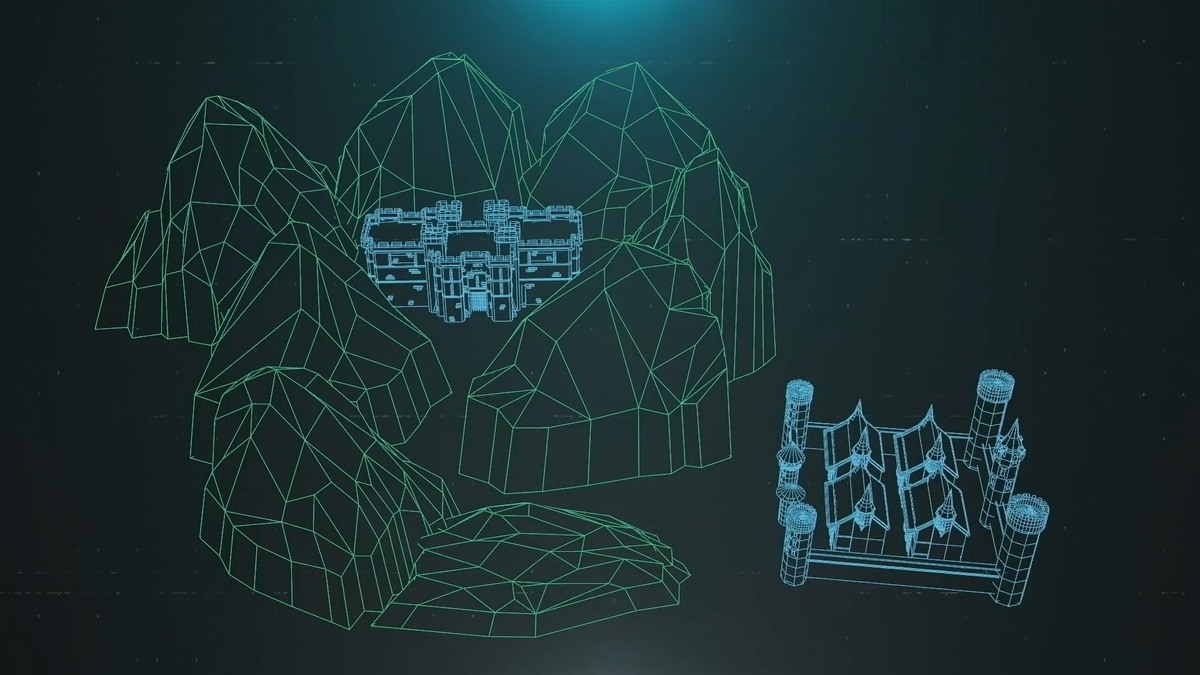

I think almost all of us in the room have experienced this, maybe in different ways. Say we're making an adventure game and we have two rich environments where we each want enough textures and models to fill memory.

Well, you can do that as long as you have a long staircase or elevator ride or a windy corridor where you can ditch the old assets and then take 30 seconds or so to load the new assets.

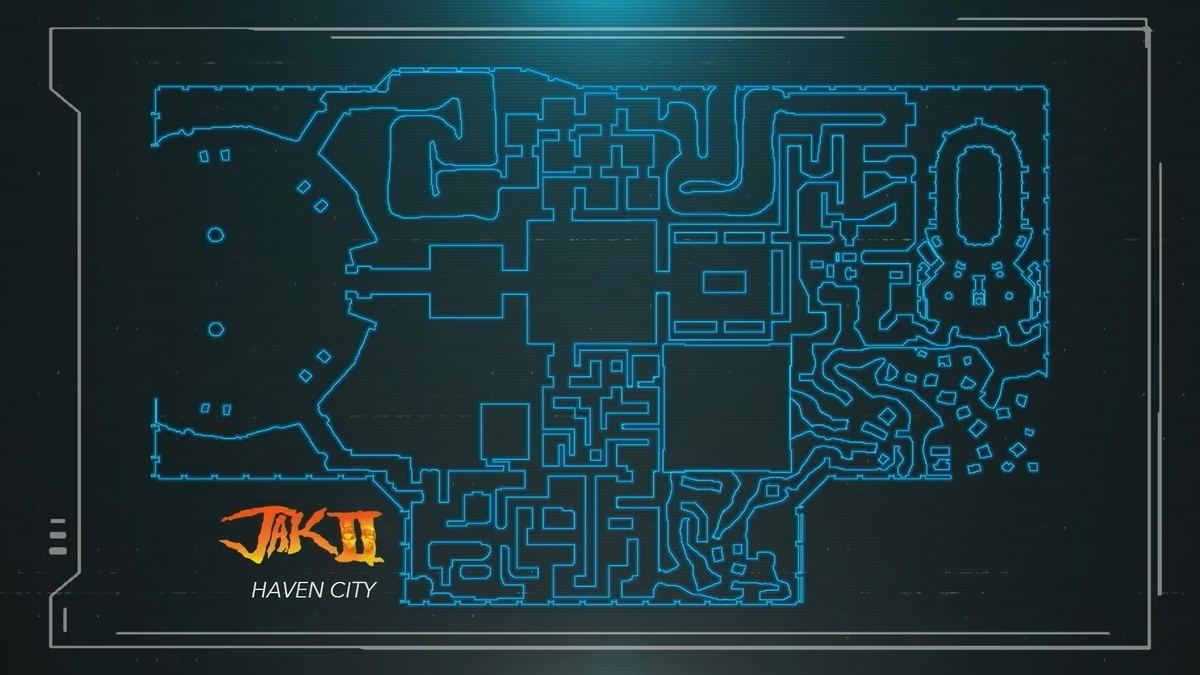

Having a 30 second elevator ride is a little extreme. More realistically we'd probably chop the world into a number of smaller pieces and then do some calculations with sightlines and run speeds, like we did for Haven City when we were making "Jak II". The game is 20 years old but not much has changed since then.

All those twisty passages are there for a reason.

There's a whole subset of level design dedicated to this sort of work but still it's a giant distraction for a team that just wants to make their game.

So when I talked about the dream of an SSD, part of the reason for that 5 gigabytes a second target was to eliminate loads, but also part of the reason for that target was streaming, as in: what if the SSD is so fast that as the player is turning around, it's possible to load textures for everything behind the player in that split second?

If you figure that it takes half a second to turn, that's 4GB of compressed data you can load. That sounds about right for next gen.

Anyway, back to the hard drive. Another strategy for increasing effective read speed is to make big sequential chunks of data.

For example, we might group all the data together for each city block. That removes most of the seeks and the streaming gets faster, but there's a downside too, which is that frequently used data is included in many chunks and therefore is on the hard drive many, many times.

"Marvel's Spider-Man" uses this strategy, and though it works very well for increasing the streaming speed, there's massive duplication as a result. Some of the objects like mailboxes or newsracks are on the hard drive four hundred times.
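The trade-off can be illustrated with a toy model. Everything here is hypothetical: the asset names, sizes and block layout are made up for illustration and are not taken from any real game.

```python
# Hypothetical asset sizes (MB) and the city blocks that use each asset.
asset_size_mb = {"mailbox": 2, "newsrack": 3, "tower_a": 40, "tower_b": 50}
blocks = {
    "block_1": ["mailbox", "newsrack", "tower_a"],
    "block_2": ["mailbox", "newsrack", "tower_b"],
    "block_3": ["mailbox", "tower_a"],
}


def chunked_size_mb(blocks, sizes):
    """Disk footprint when every block stores its own copy of each asset."""
    return sum(sizes[a] for assets in blocks.values() for a in assets)


def unique_size_mb(blocks, sizes):
    """Disk footprint when each asset is stored exactly once."""
    used = {a for assets in blocks.values() for a in assets}
    return sum(sizes[a] for a in used)


def copies_per_asset(blocks):
    """How many times each asset ends up on disk under per-block chunking."""
    counts = {}
    for assets in blocks.values():
        for a in assets:
            counts[a] = counts.get(a, 0) + 1
    return counts


print(chunked_size_mb(blocks, asset_size_mb), unique_size_mb(blocks, asset_size_mb))
print(copies_per_asset(blocks))
```

Each block becomes one sequential read, but the shared props are stored once per block that uses them, which is where the duplication comes from.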

What I'm describing here are things that cramp a creative director's style. Either level design gets a little bit boring in places, or the data is duplicated so many times that it no longer fits on the Blu-ray disc, and you end up with hard limits on the player's run speed or driving speed; the player can't go faster than the load speed from the hard drive.

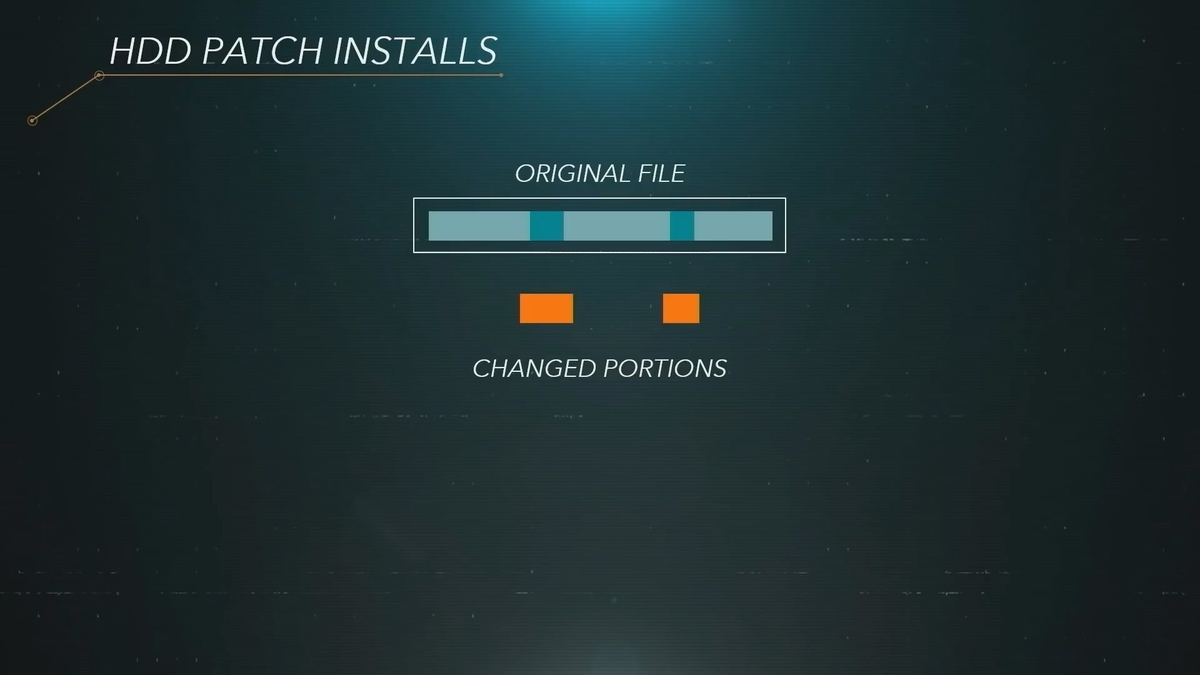

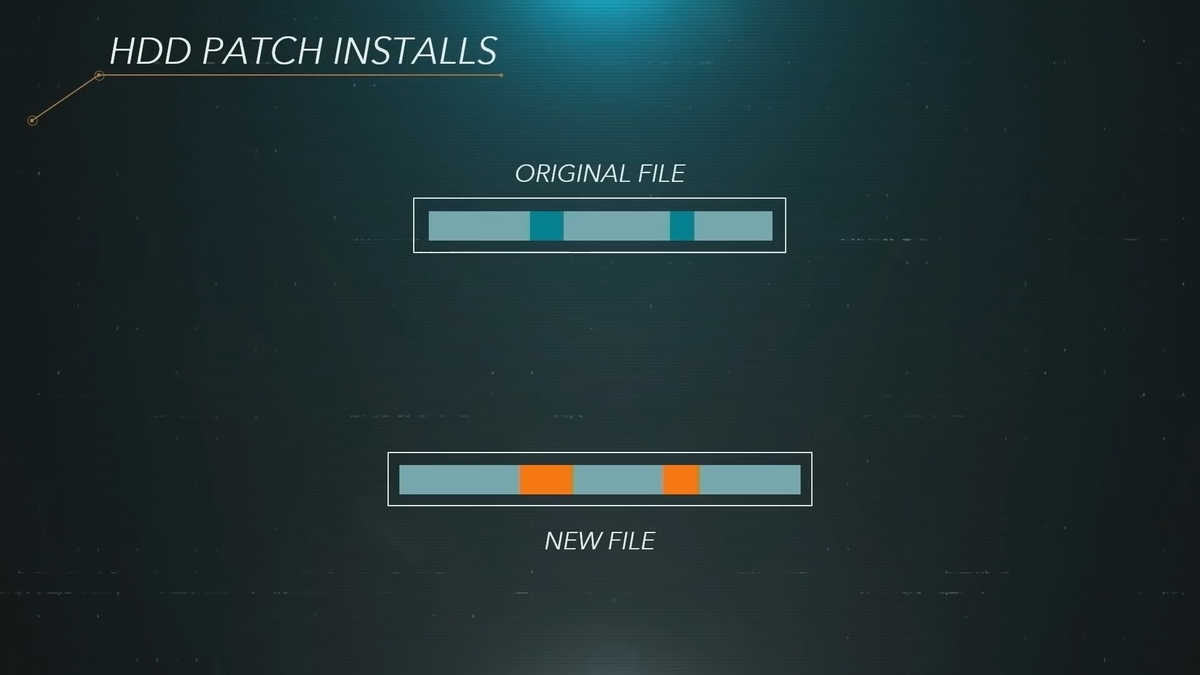

And finally I'm sure many of you have noticed that after a patch download the PlayStation 4 will sometimes take a long time to install the patch.

That's because when just part of a file has been changed the new data can be downloaded pretty quickly but before the game boots up a brand new file has to be constructed that includes the changed portion.

Otherwise every change would add a seek or two.

Even so you can occasionally see this happening on game titles; they start to hitch once they get patched enough.

With an SSD though, no seeks, so no need to make brand new files with the changes incorporated into them, which means no installs as you know them today.

There's yet one more benefit which is that system memory can be used much more efficiently.

On PlayStation 4 game data on the hard drive feels very distant and difficult to use.

By the time you realize you need a piece of data it's much too late to go out and load it, so system memory has to contain all of the data that could be used in the next 30 seconds or so of gameplay.

That means a lot of the eight gigabytes of system memory is idle; it's just waiting there to be potentially used.

On PlayStation 5 though the SSD is very close to being like more RAM.

Typically it's fast enough that when you realize you need a piece of data you can just load it from the SSD and use it. There's no need to have lots of data parked in system memory waiting to potentially be used.

A different way of saying that is that most of RAM is working on the game's behalf.

This is one of the reasons behind 16GB of GDDR6 for PlayStation 5: the presence of the SSD reduces the need for a massive intergenerational increase in size.

So back to the dream of the SSD here's the set of targets.

Boot the game in a second.

No load screens.

Design freedom meaning no twisty passages or long corridors.

More game on the disc and more game on the SSD.

And finally those patch installs go away.

The reality though is that the SSD is just one piece of the puzzle. There are a lot of places where bottlenecks can occur in between the SSD and the game code that uses the data.

You can see this on PlayStation 4: if I use an SSD with 10 times the speed of a standard hard drive I probably see only double the loading speed, if that.

For PlayStation 5 our goal was not just that the SSD itself be a hundred times faster; it was that game loads and streaming would be a hundred times faster, so every single potential bottleneck needed to be addressed.

And there are a lot of them.

Let's look at check-in and what happens when its overhead gets a hundred times larger. Conceptually check-in is a pretty simple process: data is loaded into system memory from the hard drive or SSD, it's examined, a few values are tweaked to check it in, and then it's moved to its final location.

At the SSD speeds we're talking about, that last part, moving the data, meaning copying it from one location to another, takes roughly an entire next-gen CPU core.

And that's just the tip of the iceberg. If all the overheads get a hundred times larger, that will cripple the frame rate as soon as the player moves and that massive stream of data starts coming off the SSD.

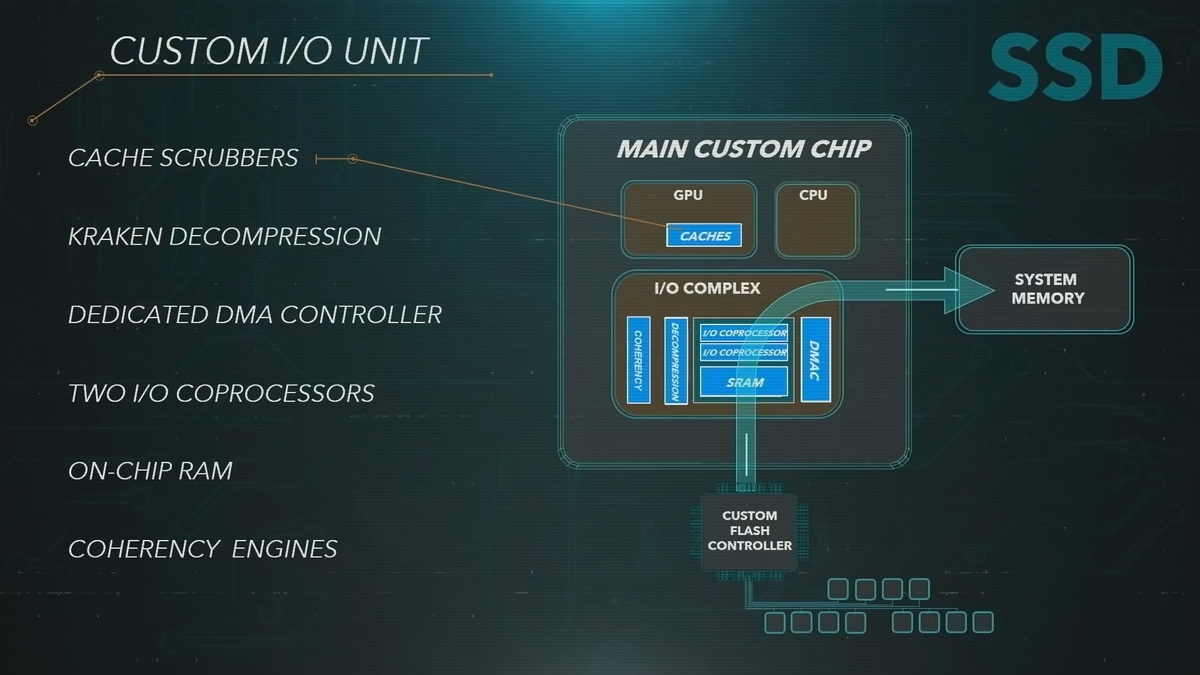

So to solve all of that we built a lot of custom hardware, namely a custom flash controller and a number of custom units in our main chip.

The flash controller in the SSD was designed for smooth and bottleneck free operation, but also with games in mind. For example, there are six levels of priority when reading from the SSD.

Priority is very important. You can imagine the player heading into some new location in the world and the game requesting a few gigabytes of textures, and while those textures are being loaded an enemy is shot and has to speak a few dying words.

Having multiple priority levels lets the audio for those dying words get loaded immediately.
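The effect of priority levels on request ordering can be sketched with an ordinary priority queue. This is only a software illustration, not how the flash controller is implemented; the `ReadQueue` class, the request labels, and the convention that 0 is the most urgent level are all assumptions.

```python
import heapq
import itertools

NUM_PRIORITY_LEVELS = 6  # from the talk; treating 0 as most urgent is an assumption


class ReadQueue:
    """Toy read queue that always serves the most urgent pending request."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker keeps FIFO order per level

    def submit(self, priority, label):
        if not 0 <= priority < NUM_PRIORITY_LEVELS:
            raise ValueError("priority out of range")
        heapq.heappush(self._heap, (priority, next(self._order), label))

    def next_request(self):
        _, _, label = heapq.heappop(self._heap)
        return label


queue = ReadQueue()
for i in range(3):
    queue.submit(4, f"texture_chunk_{i}")  # bulk texture streaming
queue.submit(0, "dying_words_audio")       # submitted last, but urgent

served = [queue.next_request() for _ in range(4)]
print(served)
```

Even though the audio request arrives after the texture requests, it is served first, while requests at the same level keep their submission order.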

On one side that flash controller connects to the actual flash dies that supply the storage. To reach our bandwidth target of 5 gigabytes a second we ended up with a 12 channel interface; 8 channels wouldn't be enough.

The resulting bandwidth we have achieved is actually five-and-a-half gigabytes a second. With a 12 channel interface the most natural size that emerges for an SSD is 825 gigabytes.

The key question for us was: is that enough? It's tempting to add more, but flash certainly doesn't come cheap and we have a responsibility to our gaming audience to be cost effective with regards to what we put in the console.

Ultimately we resolved this question by looking at the play patterns of a broad range of gamers.

We examined the specific games that they were playing over the course of a weekend or week or a month and whether that set of games would fit properly on the SSD.

We were able to establish that the friction caused by reinstalls or re-downloads would be quite low, and so we locked in on that 825 gigabyte size while also preparing multiple strategies so that those who want more storage can add it. I'll go through the details in a moment.

Back to the flash controller: on the other side it connects to our main custom chip via 4 lanes of Gen 4 PCIe, and inside the main custom chip is a pretty hefty unit dedicated to I/O.

Before we talk about what that does let's talk compression for a moment.

PlayStation 4 used "Zlib" as its compression format. We decided to use it again on PlayStation 5, but on my 2017 tour of developers I learned about a new format called "Kraken" from RAD Game Tools.

It's like Zlib's smarter cousin: similar types of algorithms but about 10% better compression, which is pretty big. That means 10% more game on the UHD Blu-ray disc or on the SSD.

Kraken had only been out for a year but it was already becoming a de facto industry standard; half of the teams I talked to were either using it or getting ready to evaluate it.

So we hustled and built a custom decompressor into the I/O unit, one capable of handling over 5 gigabytes of Kraken format input data a second.

After decompression that typically becomes 8 or 9 gigabytes but the unit itself is capable of outputting as much as 22 gigabytes a second if the data happened to compress particularly well.
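Those output figures imply a range of compression ratios, which a few lines of arithmetic make explicit. Pairing them with the 5.5 gigabytes a second quoted earlier for the SSD is an assumption; the talk only says the decompressor handles "over 5" gigabytes a second of input.

```python
RAW_GB_PER_S = 5.5  # assumption: use the SSD bandwidth quoted earlier as the input rate


def compression_ratio(output_gb_per_s, input_gb_per_s=RAW_GB_PER_S):
    """Uncompressed output rate divided by compressed input rate."""
    return output_gb_per_s / input_gb_per_s


typical_low = compression_ratio(8)
typical_high = compression_ratio(9)
peak = compression_ratio(22)

print(f"typical: {typical_low:.2f}x to {typical_high:.2f}x, peak: {peak:.1f}x")
```

Under that assumption, typical game data compresses by roughly 1.5 to 1, and the unit's ceiling corresponds to data that compresses 4 to 1.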

By the way, in terms of performance that custom decompressor equates to nine of our Zen 2 cores; that's what it would take to decompress the Kraken stream with a conventional CPU.

There's a lot more in the custom I/O unit, including a dedicated DMA controller; the game can direct exactly where it wants to send the data coming off of the SSD.

This equates to another Zen 2 core or two in terms of its copy performance.

Its primary purpose is to remove check-in as a bottleneck.

There are two dedicated I/O coprocessors and a large RAM pool. These aren't Zen 2 cores; they're there principally to direct the variety of custom hardware around them.

One of the coprocessors is dedicated to SSD I/O; this lets us bypass traditional file I/O and its bottlenecks when reading from the SSD.

The other is responsible for memory mapping, which I know doesn't sound like anything related to the SSD, but a lot of developers map and remap memory as part of file I/O and this too can become a bottleneck.

There are coherency engines to assist the coprocessors. Coherency comes up in a lot of places; probably the biggest coherency issue is stale data in the GPU caches.

Flushing all of the GPU caches whenever the SSD is read is an unattractive option; it could really hurt the GPU performance.

So we've implemented a gentler way of doing things where the coherency engines inform the GPU of the overwritten address ranges and custom scrubbers in several dozen GPU caches do pinpoint evictions of just those address ranges.
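The difference between a full flush and a pinpoint eviction can be sketched with a toy cache model. This is purely conceptual and says nothing about the real hardware; the `ToyCache` class, the 64-byte line size and the address values are all assumptions chosen for illustration.

```python
CACHE_LINE_BYTES = 64  # assumption: illustrative line size, not a hardware figure


class ToyCache:
    """Toy cache keyed by line-aligned address."""

    def __init__(self):
        self.lines = {}  # line start address -> cached bytes

    def fill(self, address, data):
        self.lines[address - address % CACHE_LINE_BYTES] = data

    def flush_all(self):
        """Evict everything, whether or not it is stale."""
        evicted = len(self.lines)
        self.lines.clear()
        return evicted

    def scrub(self, start, length):
        """Evict only lines that overlap the range [start, start + length)."""
        end = start + length
        stale = [a for a in self.lines
                 if a < end and a + CACHE_LINE_BYTES > start]
        for a in stale:
            del self.lines[a]
        return len(stale)


cache = ToyCache()
for addr in range(0, 1024, CACHE_LINE_BYTES):  # 16 resident lines
    cache.fill(addr, b"old")

evicted = cache.scrub(start=128, length=100)   # a read overwrote 100 bytes at 128
remaining = len(cache.lines)
print(evicted, remaining)
```

Only the two lines touching the overwritten range are dropped; the other fourteen stay warm, whereas `flush_all` would have discarded all sixteen.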

The best thing is, as a game developer, when you read from the SSD you don't need to know any of this. You don't even need to know that your data is compressed.

You just indicate what data you'd like to read from your original uncompressed file and where you'd like to put it, and the whole process of loading it happens invisibly to you and at very high speed.

Back to the dream: thanks to all of that surrounding hardware, our 5.5 gigabytes a second really should translate into something like a hundred times faster I/O than PS4 and allow the dream of no load screens and superfast streaming to become a reality.

Having said that, expandability of our SSD is going to be quite important. Flash is costly and you may very well want to add storage to whatever we put in the console.

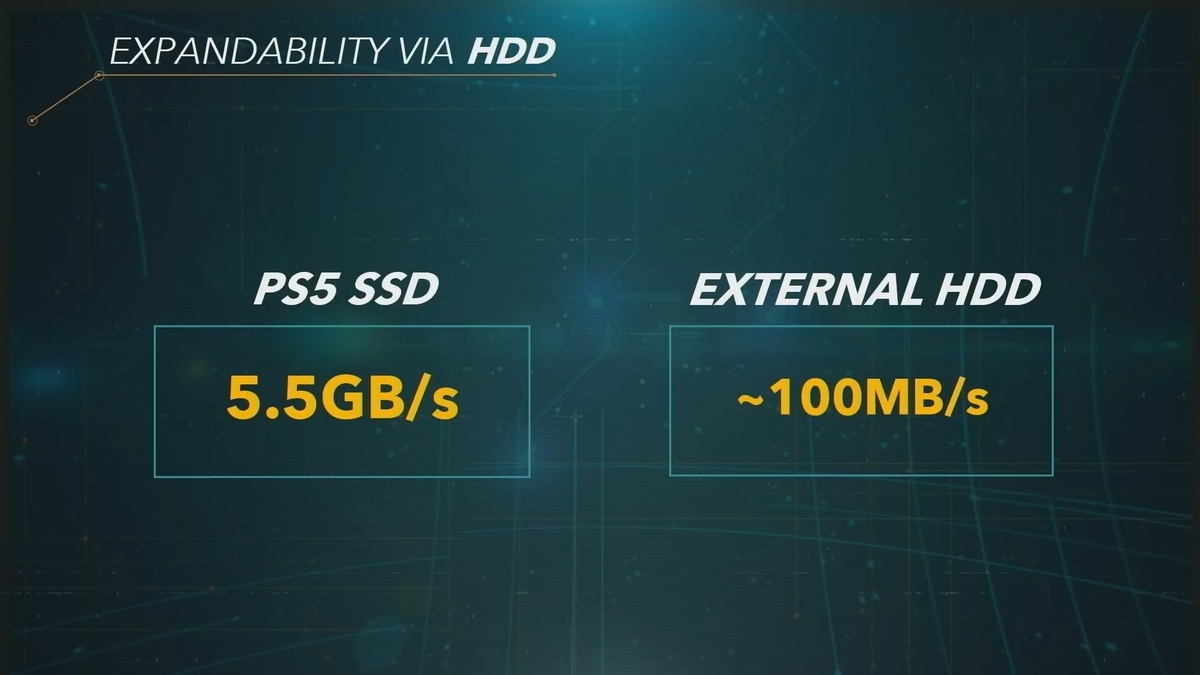

Now the kind of storage you need depends on how you're going to use it. If you have an extensive PlayStation 4 library and you'd like to take advantage of backwards compatibility to play those games on PlayStation 5, then a large external hard drive is ideal.

You can leave your games on the hard drive and play them directly from there, thus saving the pricier SSD storage for your PlayStation 5 titles, or you can copy your active PlayStation 4 titles to the SSD.

If your purpose in adding more storage is to play PlayStation 5 titles though, ideally you would add to your SSD storage.

We will be supporting certain M.2 SSDs.

These are internal drives that you can get on the open market and install in a bay in the PlayStation 5.

As for which ones we support and when, I'll get to that in a moment.

They connect through the custom I/O unit just like our SSD does.

So they can take full advantage of the decompression, I/O coprocessors and all the other features I was talking about.

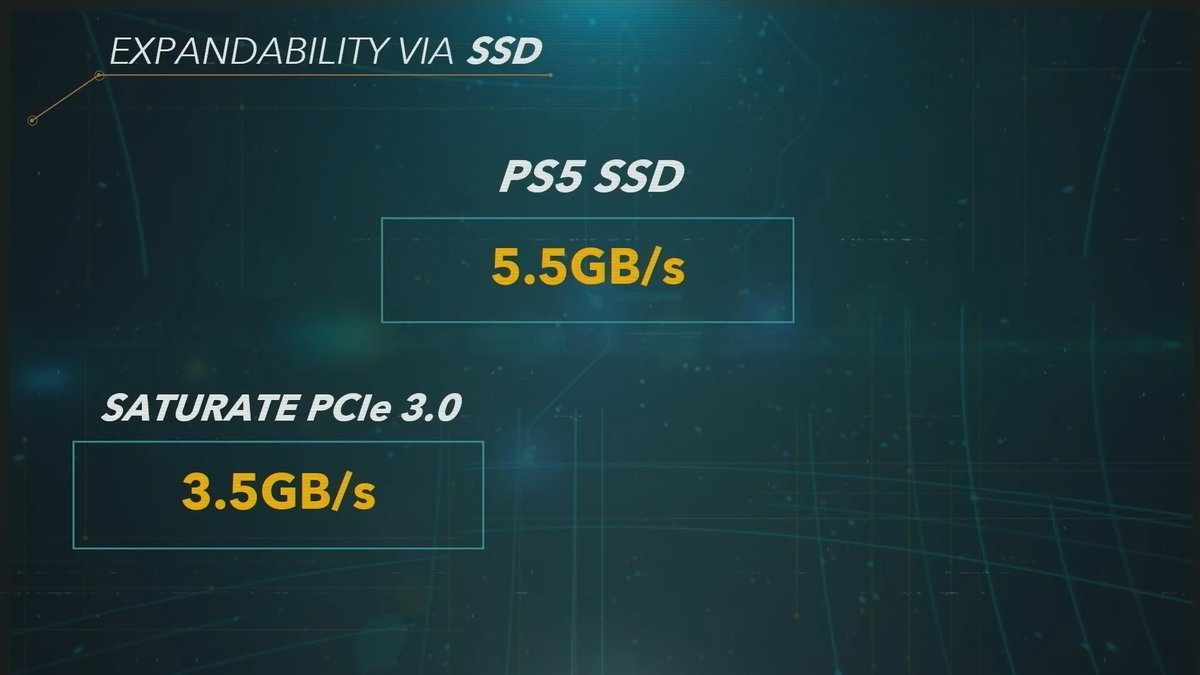

Here's the catch though: that commercial drive has to be at least as fast as ours. Games that rely on the speed of our SSD need to work flawlessly with any M.2 drive.

When I gave the Wired interview last year I said that the PlayStation 5 SSD was faster than anything available on PC.

At the time commercial M.2 drives used PCIe 3.0, and 4 lanes of that cap out at 3.5 gigabytes a second.

In other words, no PCIe 3.0 drive can hit the required spec.
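The PCIe ceiling can be checked from the link parameters. The sketch below uses the published per-lane transfer rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and 128b/130b line encoding; the function name is mine, and real drives land below these theoretical numbers because of protocol overhead, which is consistent with the 3.5 gigabytes a second quoted above.

```python
def pcie_gb_per_s(transfer_rate_gt_s, lanes, encoding=128 / 130):
    """Theoretical link bandwidth in GB/s, before packet and protocol overhead."""
    bits_per_second = transfer_rate_gt_s * 1e9 * encoding * lanes
    return bits_per_second / 8 / 1e9


gen3_x4 = pcie_gb_per_s(8, 4)    # PCIe 3.0: 8 GT/s per lane
gen4_x4 = pcie_gb_per_s(16, 4)   # PCIe 4.0: 16 GT/s per lane
TARGET_GB_PER_S = 5.5            # PlayStation 5 SSD bandwidth from the talk

print(f"Gen3 x4: {gen3_x4:.2f} GB/s, Gen4 x4: {gen4_x4:.2f} GB/s")
print("Gen3 x4 meets target:", gen3_x4 >= TARGET_GB_PER_S)
print("Gen4 x4 meets target:", gen4_x4 >= TARGET_GB_PER_S)
```

Even the theoretical maximum of a four-lane PCIe 3.0 link, a little under 4 gigabytes a second, falls short of 5.5, while four lanes of PCIe 4.0 leave headroom.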

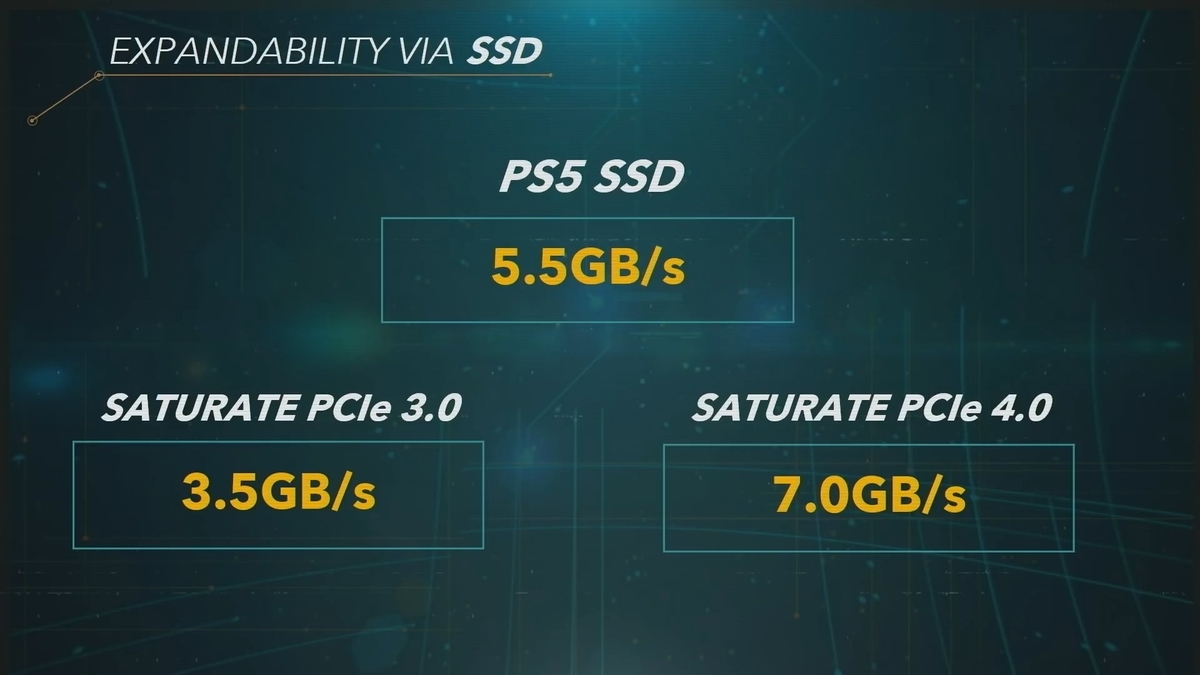

M.2 drives with PCIe 4.0 are now out in the market. We're getting in samples and seeing 4 or 5 gigabytes a second from them.

By year's end I expect there will be drives that saturate 4.0 and support seven gigabytes a second. Having said that, we are comparing apples and oranges though, because that commercial M.2 drive will have its own architecture, its own flash controller and so on.

For example, the NVMe specification lays out a priority scheme for requests that the M.2 drives can use.

And that scheme is pretty nice, but it only has two true priority levels; our drive supports six.

We can hook up a drive with only two priority levels, definitely, but our custom I/O unit has to arbitrate the extra priorities rather than the M.2 drive's flash controller, and so the M.2 drive needs a little extra speed to take care of issues arising from the different approach.

That commercial drive also needs to physically fit inside of the bay we created in PlayStation 5 for M.2 drives.

Unlike internal hard drives, there's unfortunately no standard for the height of an M.2 drive, and some M.2 drives have giant heat sinks; in fact some of them even have their own fans.

Right now we're getting M.2 drive samples and benchmarking them in various ways. When games hit beta as they get ready for the PlayStation 5 launch at year-end, we'll also be doing some compatibility testing to make sure that the architecture of particular M.2 drives isn't too foreign for the games to handle.

Once we've done that compatibility testing we should be able to start letting you know which drives will physically fit and which drive samples have benchmarked appropriately high in our testing.

It would be great if that happened by launch, but it's likely to be a bit past it, so please hold off on getting that M.2 drive until you hear from us.

Ok back to our principles.

Balancing evolution and revolution is the second of them.

This was definitely a recurring theme with the GPU.

We need new GPU features and capabilities; if we only have more performance it's not really a new generation of console. Of course many of these capabilities result in more performance; that's part of why a PlayStation 5 teraflop is more powerful than a PlayStation 4 teraflop.

But we aren't just looking for the performance; we also need the ability to do something with the GPU that could not have been done before.

And we need higher performance per watt; every time we double the performance of some GPU component we don't want to find we've doubled the power consumed and the heat produced.

But at the same time we have to make sure the GPU can run PS4 games, and we have to ensure that the architecture is easy for the developers to adopt.

Now backwards compatibility was handled masterfully by AMD; they treated it as a key need throughout the design process.

As our solution to adding new features without blindsiding developers, we made sure that if there were new significant features it would be optional to use them.

The GPU supports ray-tracing but you don't have to use ray-tracing to make your game. The GPU supports primitive shaders but you can release your first game on PlayStation 5 without making any use of them.

Before I get into the capabilities of the GPU I'd like to make clear two points that can be quite confusing.

First, we have a custom AMD GPU based on their "RDNA2" technology. What does that mean? AMD is continuously improving and revising their tech. For RDNA2 their goals were, roughly speaking, to reduce power consumption by rearchitecting the GPU to put data close to where it's needed, to optimize the GPU for performance, and to add a new, more advanced feature set.

But that feature set is malleable, which is to say that we have our own needs for PlayStation and that can factor into what the AMD roadmap becomes.

So collaboration is born.

If we bring concepts to AMD that are felt to be widely useful, then they can be adopted into RDNA2 and used broadly, including in PC GPUs.

If the ideas are sufficiently specific to what we're trying to accomplish, like the GPU cache scrubbers I was talking about, then they end up being just for us.

If you see a similar discrete GPU available as a PC card at roughly the same time as we release our console, that means our collaboration with AMD succeeded in producing technology useful in both worlds. It doesn't mean that we as Sony simply incorporated the PC part into our console.

This continuous improvement in AMD technology means it's dangerous to rely on teraflops as an absolute indicator of performance.

And CU count should be avoided as well.

In the case of CPUs we all understand this: the PlayStation 4 and PlayStation 5 each have eight CPUs, but we'd never think that means the capabilities and performance are equal.

It's the same for CUs. For one thing, they've been getting much larger over time; adding new features means adding lots of transistors.

In fact the transistor count for a PlayStation 5 CU is 62% larger than the transistor count for a PlayStation 4 CU.

Second, the PlayStation 5 GPU is backwards compatible with PlayStation 4.

What does that mean? One way you can achieve backwards compatibility is to put the previous console's chipset in the new console, like we did with some PlayStation 3s.

But that's of course extremely expensive.

A better way is to incorporate any differences in the previous console's logic into the new console's custom chips.

Meaning that even as the technology evolves, the logic and feature set that PlayStation 4 and PlayStation 4 Pro titles rely on is still available in backwards compatibility modes.

One advantage of this strategy is that once backwards compatibility is in the console it's in.

It's not as if a cost down will remove backwards compatibility like it did on PlayStation 3. Achieving this unification of functionality took years of effort by AMD, as any roadmap advancement creates a potential divergence in logic. Running PS4 and PS4 Pro titles at boosted frequencies has also added complexity. The boost is truly massive this time around and some game code just can't handle it; testing has to be done on a title by title basis.

Results are excellent though. We recently took a look at the top hundred PlayStation 4 titles as ranked by play time, and we're expecting almost all of them to be playable at launch on PlayStation 5.

With regards to new features as I said our strategy was to try to break new ground but at the same time not to require use of the new GPU capabilities.

For more than a decade GPUs have imposed a restriction on game engines.

Software handles vertex processing, but for the most part dedicated hardware is responsible for the triangles and other geometry that the vertices form.

That means it's not possible to do even basic optimizations such as aborting processing of a vertex if all geometry that uses it is off screen.

PlayStation 5 has a new unit called the Geometry Engine which brings handling of triangles and other primitives under full programmatic control.

As a game developer you're free to ignore its existence and use the PlayStation 5 GPU as if it were no more capable than the PS4 GPU or you can use this new intelligence in various ways.

Simple usage could be performance optimizations such as removing back-faced or off-screen vertices and triangles.

More complex usage involves something called primitive shaders which allow the game to synthesize geometry on-the-fly as it's being rendered.

It's a brand new capability.

Using primitive shaders on PlayStation 5 will allow for a broad variety of techniques including smoothly varying level of detail addition of procedural detail to close up objects and improvements to particle effects and other visual special effects.
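The simple usage mentioned above, removing back-faced or off-screen triangles, can be illustrated on the CPU in a few lines. This is a conceptual sketch only and not the Geometry Engine's programming interface; the function names, the counter-clockwise-is-front convention and the screen-space coordinates are assumptions.

```python
def signed_area(a, b, c):
    """Twice the signed area of the screen-space triangle a, b, c."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])


def is_back_facing(a, b, c):
    # Assumption: counter-clockwise winding means the triangle faces the camera.
    return signed_area(a, b, c) <= 0


def is_off_screen(tri, width, height):
    """Conservative test: every vertex lies beyond the same screen edge."""
    xs = [p[0] for p in tri]
    ys = [p[1] for p in tri]
    return max(xs) < 0 or min(xs) > width or max(ys) < 0 or min(ys) > height


def cull(triangles, width, height):
    """Keep only triangles that are front-facing and possibly visible."""
    return [t for t in triangles
            if not is_back_facing(*t) and not is_off_screen(t, width, height)]


front = ((0, 0), (10, 0), (0, 10))          # counter-clockwise, on screen
back = ((0, 0), (0, 10), (10, 0))           # clockwise, so back-facing
outside = ((-30, 0), (-20, 0), (-30, 10))   # entirely left of the screen

visible = cull([front, back, outside], width=1920, height=1080)
print(len(visible))
```

Discarding such triangles before any further per-triangle work is done is the kind of optimization that fixed-function geometry hardware did not leave open to the game.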

Another major new feature of our custom RDNA2 based GPU is ray-tracing. Using the same strategy as AMD's upcoming PC GPUs, the CUs contain a new specialized unit called the Intersection Engine, which can calculate the intersection of rays with boxes and triangles.

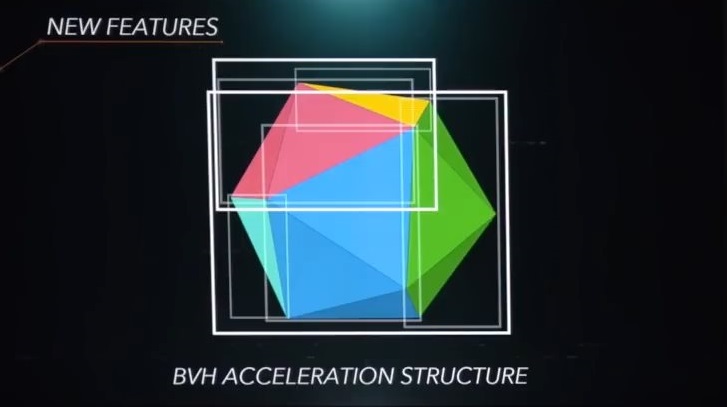

To use the Intersection Engine, first you build what is called an acceleration structure.

It's data in RAM that contains all of your geometry.

There's a specific set of formats you can use; they're variations on the same BVH concept. Then in your shader program you use a new instruction that asks the Intersection Engine to check a ray against the BVH.

While the Intersection Engine is processing the requested ray-triangle or ray-box intersections, the shaders are free to do other work.
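For readers unfamiliar with what a ray-box intersection involves, here is the standard "slab" test written out in software. It illustrates the kind of calculation performed at each BVH node; it is not a description of how the Intersection Engine is built, and the function name and tuple-based vectors are my own choices.

```python
import math


def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: does the ray origin + t * direction (t >= 0) hit the box?"""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            if o < lo or o > hi:   # parallel to this slab and outside it
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0        # order the entry and exit distances
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:         # the per-axis intervals no longer overlap
            return False
    return True


box_min, box_max = (-1.0, -1.0, -1.0), (1.0, 1.0, 1.0)
toward = ray_hits_box((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), box_min, box_max)
sideways = ray_hits_box((0.0, 0.0, -5.0), (0.0, 1.0, 0.0), box_min, box_max)
away = ray_hits_box((0.0, 0.0, -5.0), (0.0, 0.0, -1.0), box_min, box_max)
print(toward, sideways, away)
```

A BVH traversal repeats this test down the tree, descending only into boxes the ray actually enters, and finishes with ray-triangle tests at the leaves.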

Having said that, the ray-tracing instruction is pretty memory intensive, so it's a good mix with logic heavy code.

There's of course no need to use ray-tracing; PS4 graphics engines will run just fine on PlayStation 5.

But it presents an opportunity for those interested.

I'm thinking it'll take less than a million rays a second to have a big impact on audio; that should be enough for audio occlusion and some reverb calculations.

With a bit more of the GPU invested in ray-tracing it should be possible to do some very nice global illumination.

Having said that, adding ray-traced shadows and reflections to a traditional graphics engine could easily take hundreds of millions of rays a second, and full ray-tracing could take billions.
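To put those ray counts in perspective, here is a rough budget calculation. The 3840 by 2160 resolution and 60 frames a second are assumptions picked for illustration; the talk does not tie the ray counts to any particular resolution or frame rate.

```python
WIDTH, HEIGHT, FPS = 3840, 2160, 60  # assumptions, not figures from the talk


def rays_per_second(rays_per_pixel, width=WIDTH, height=HEIGHT, fps=FPS):
    """Rays needed each second for a given number of rays per pixel per frame."""
    return rays_per_pixel * width * height * fps


one_per_pixel = rays_per_second(1)
audio_rays_per_frame = 1_000_000 / FPS  # the "million rays a second" audio case

print(f"{one_per_pixel:,} rays/s at one ray per pixel")
print(f"{audio_rays_per_frame:,.0f} rays per frame for audio")
```

Under those assumptions a single ray per pixel per frame already comes to about half a billion rays a second, which is why per-pixel effects land in the hundreds of millions while audio needs only a tiny fraction of that.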

How far can we go? I'm starting to get quite bullish. I've already seen a PlayStation 5 title that's successfully using ray-tracing based reflections in complex animated scenes with only modest costs.

Another set of issues for the GPU involved size and frequency.

How big do we make the GPU and what frequency do we run it at?

This is a balancing act; the chip has a cost and there's a cost for whatever we use to supply that chip with power and to cool it.

In general I like running the GPU at a higher frequency. Let me show you why.

Here's two possible configurations for a GPU roughly of the level of the PlayStation 4 Pro. This is a thought experiment; don't take these configurations too seriously.

If you just calculate teraflops you get the same number, but actually the performance is noticeably different because teraflops is defined as the computational capability of the vector ALU.

That's just one part of the GPU; there are a lot of other units, and those other units all run faster when the GPU frequency is higher. At 33% higher frequency rasterization goes 33% faster, processing the command buffer goes that much faster, the L2 and other caches have that much higher bandwidth, and so on.

About the only downside is that system memory is 33% further away in terms of cycles. But the large number of benefits more than counterbalance that.
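The thought experiment can be made concrete with the usual teraflops formula. The 36 and 48 CU counts appear in the talk; the specific clocks of 1.0 and 0.75 GHz are assumptions chosen only so that the narrower GPU runs 33% faster, and the 64 lanes per CU with two operations per fused multiply-add is the standard way this figure is computed for AMD GPUs.

```python
LANES_PER_CU = 64    # vector ALU lanes in an AMD compute unit
FLOPS_PER_LANE = 2   # a fused multiply-add counts as two operations


def teraflops(cu_count, clock_ghz):
    """Peak vector ALU throughput in teraflops."""
    return cu_count * LANES_PER_CU * FLOPS_PER_LANE * clock_ghz / 1000


wide_slow = teraflops(48, 0.75)    # assumed clock; only the 4:3 ratio matters
narrow_fast = teraflops(36, 1.00)  # assumed clock
frequency_gain = 1.00 / 0.75 - 1   # how much faster everything else runs

print(f"{wide_slow:.3f} TF vs {narrow_fast:.3f} TF, "
      f"{frequency_gain:.0%} higher frequency")
```

Both configurations report the same teraflops number, yet every unit outside the vector ALU runs a third faster in the second one.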

As a friend of mine says a rising tide lifts all boats.

Also it's easier to fully use 36 CUs in parallel than it is to fully use 48 CUs. When triangles are small it's much harder to fill all those CUs with useful work.

So there's a lot to be said for faster, assuming you can handle the resulting power and heat issues, which frankly we haven't always done the best job at.

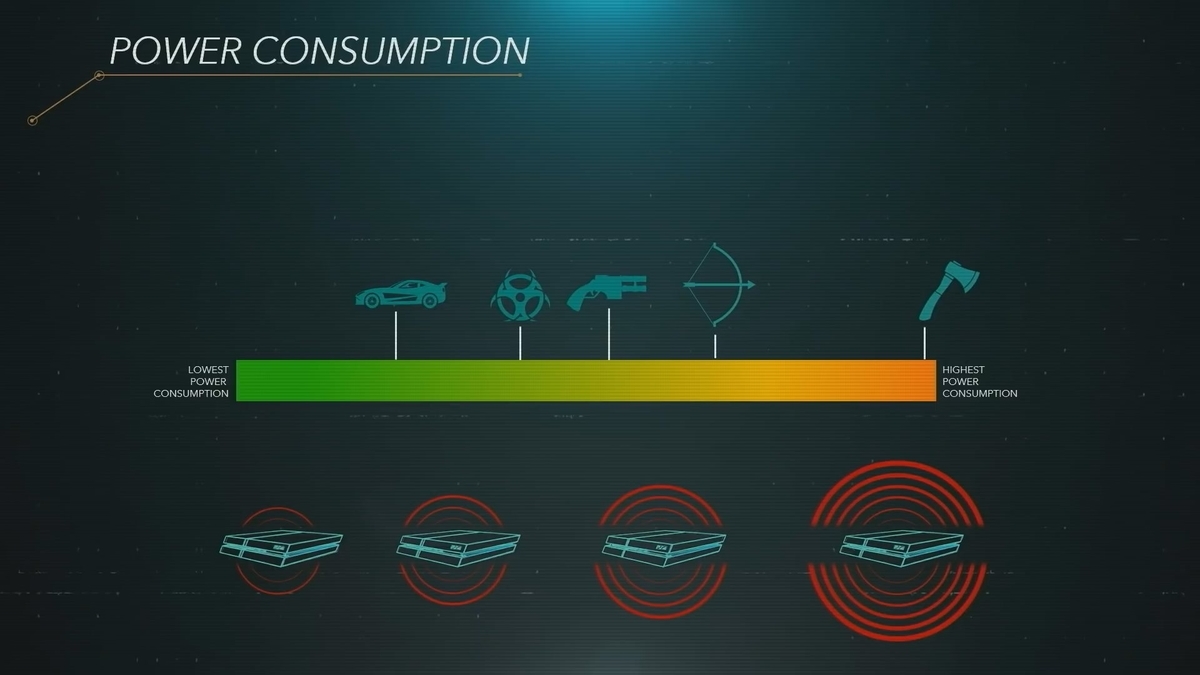

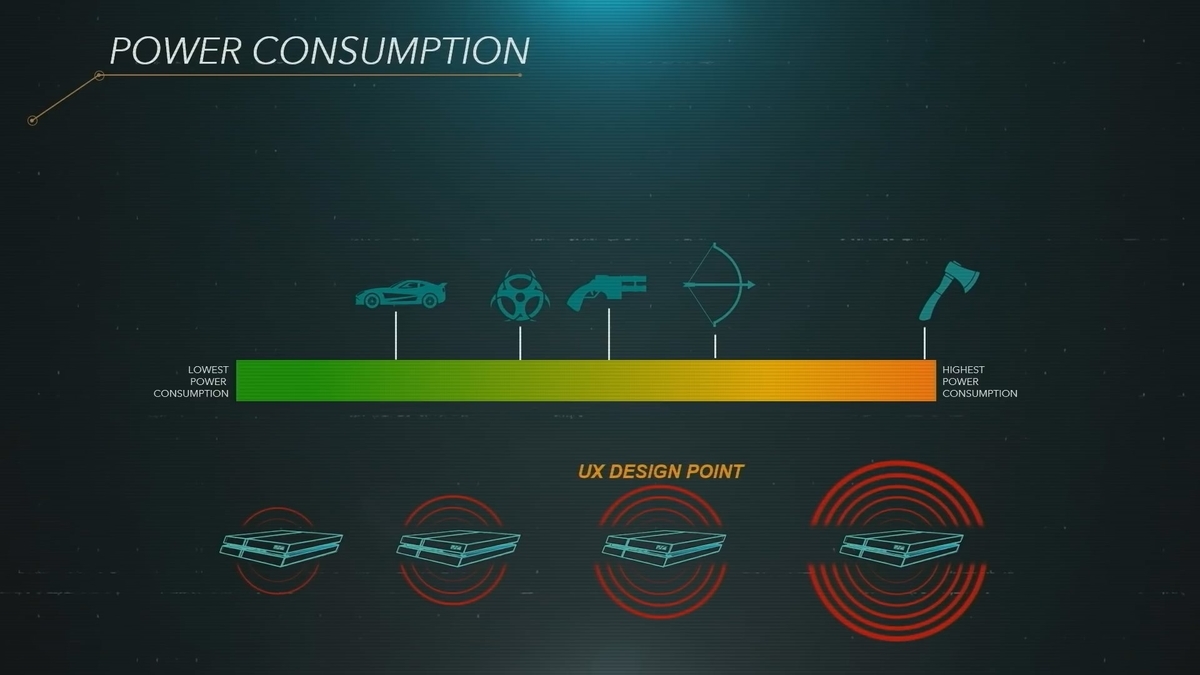

Part of the reason for that is historically our process for setting CPU and GPU frequencies has relied on some heavy duty guesswork with regards to how much electrical power games will consume and how much heat will be produced as a result inside of the console.

Power consumption varies a lot from game to game.

When I play "God of War" on my PS4 Pro I know the power consumption is high just by the fan noise.

But power isn't simply about engine quality.

It's about the minutiae of what's being displayed and how.

It's counterintuitive, but processing dense geometry typically consumes less power than processing simple geometry, which is, I suspect, why the map screen in "Horizon", with its low triangle count, makes my PS4 Pro heat up so much.

Our process on previous consoles has been to try to guess what the maximum power consumption during the entire console lifetime might be which is to say the worst case scene in the worst case game and prepare a cooling solution that we think will be quiet at that power level.

If we get it right, fan noise is minimal. If we get it wrong, the console will be quite loud for the higher power games, and there's even a chance that it might overheat and shut down if we misestimate power too badly.

PlayStation 5 is especially challenging because the CPU supports 256 bit native instructions that consume a lot of power.

These are great here and there, but presumably only minimally used. Or are they? If we plan for major 256 bit instruction usage, we need to set the CPU clock substantially lower or noticeably increase the size of the power supply and fan.

So after much discussion we decided to go with a very different direction on PlayStation 5.

We built a GPU with 36 CUs. Mind you, RDNA2 CUs are large; each has 62% more transistors than the CUs we were using on PlayStation 4.

So if we compare transistor counts 36 RDNA2 CUs equates to roughly 58 PlayStation 4 CUs.
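As a quick check of that comparison, the figure follows directly from the two numbers quoted above:

```python
# Transistor-count equivalence, using the figures quoted in the talk.
RDNA2_CUS = 36
TRANSISTOR_RATIO = 1.62  # each RDNA2 CU has 62% more transistors than a PS4 CU

ps4_equivalent_cus = RDNA2_CUS * TRANSISTOR_RATIO
print(f"{RDNA2_CUS} RDNA2 CUs ~ {ps4_equivalent_cus:.1f} PS4-sized CUs")
```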

It is a fairly sizeable GPU.

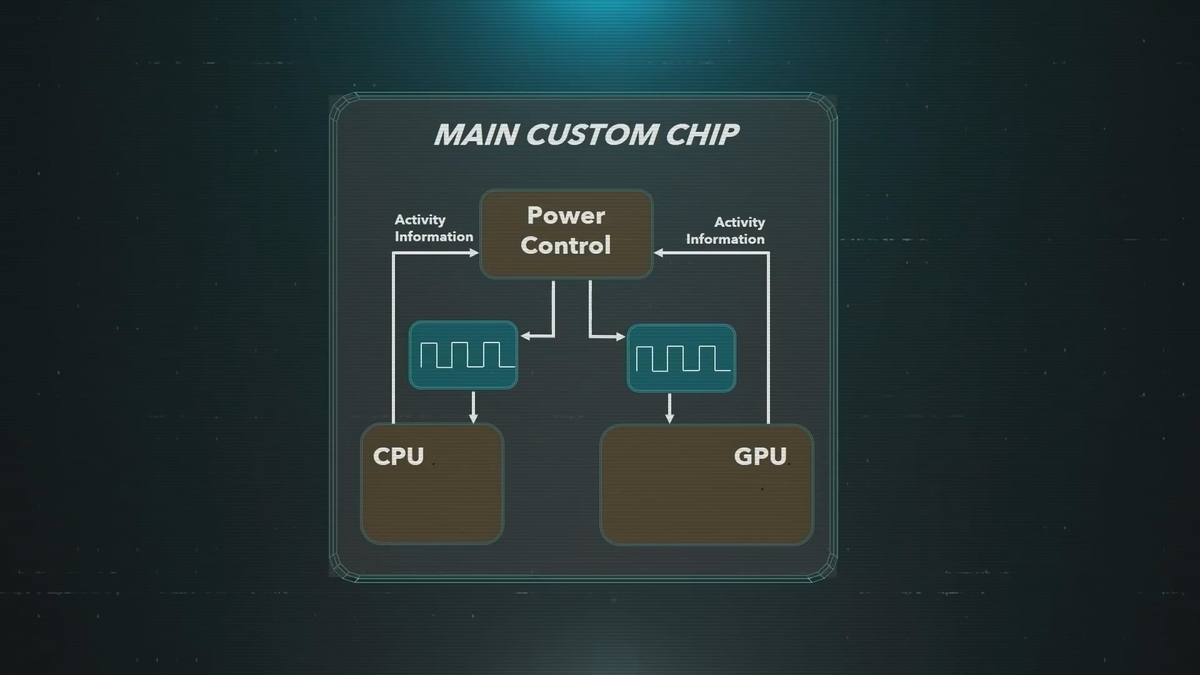

Then we went with a Variable Frequency Strategy for PlayStation 5 which is to say we continuously run the GPU and CPU in boost mode.

We supply a generous amount of electrical power and then increase the frequency of GPU and CPU until they reach the capabilities of the system's cooling solution.

It's a completely different paradigm: rather than running at constant frequency and letting power vary based on the workload, we run at essentially constant power and let the frequency vary based on the workload.

We then tackled the engineering challenge of a cost-effective and high-performance cooling solution designed for that specific power level.

In some ways it becomes a simpler problem because there are no more unknowns; there's no need to guess what power consumption the worst case game might have.

As for the details of the cooling solution, we're saving them for our "Teardown". I think you'll be quite happy with what the engineering team came up with.

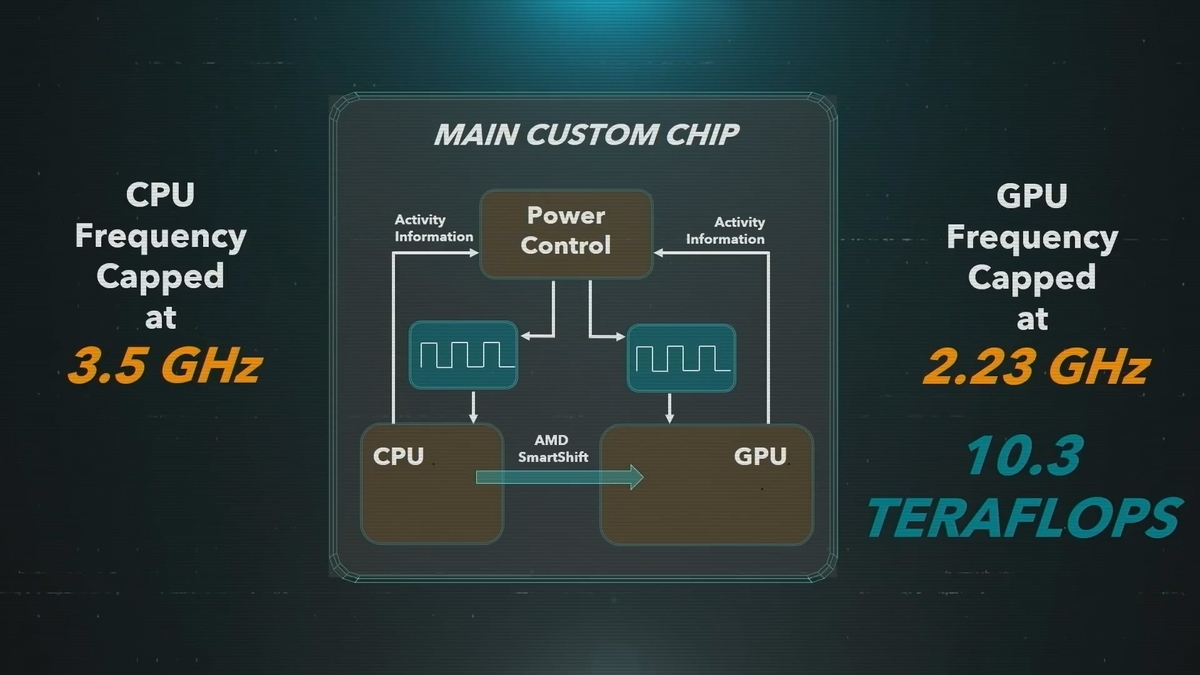

So how fast can we run the GPU and CPU with this strategy?

The simplest approach would be to look at the actual temperature of the silicon die and throttle the frequency on that basis.

But that won't work. It fails to create a consistent PlayStation 5 experience; it wouldn't do to run a console slower simply because it was in a hot room.

So rather than look at the actual temperature of the silicon die we look at the activities that the GPU and CPU are performing and set the frequencies on that basis which makes everything deterministic and repeatable.
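The idea of setting frequency from activity rather than temperature can be illustrated with a toy governor. This is a hypothetical sketch only: the talk does not describe the real power model, so the cubic model, the constant `K`, the power budget and the function names here are all made up for illustration. The one figure taken from the talk is the 2.23 GHz GPU cap.

```python
# Hypothetical sketch of an activity-based frequency governor.
# The power model (power = activity * K * f**3) and every constant except the
# frequency cap are illustrative assumptions, not details from the talk.

F_CAP_GHZ = 2.23        # GPU frequency cap quoted in the talk
POWER_BUDGET_W = 180.0  # made-up fixed power budget
K = 16.0                # made-up constant: watts per GHz^3 at activity 1.0

def modeled_power(activity: float, f_ghz: float) -> float:
    """Estimated power draw for a workload activity level at a given clock."""
    return activity * K * f_ghz ** 3

def choose_frequency(activity: float) -> float:
    """Highest clock (up to the cap) whose modeled power fits the budget.

    Die or room temperature is deliberately not an input, so the same
    workload always yields the same clock on every console.
    """
    if activity <= 0.0:
        return F_CAP_GHZ
    f_limit = (POWER_BUDGET_W / (activity * K)) ** (1.0 / 3.0)
    return min(F_CAP_GHZ, f_limit)

for activity in (0.6, 1.0, 1.1):
    f = choose_frequency(activity)
    print(f"activity {activity:.1f}: {f:.3f} GHz, {modeled_power(activity, f):.1f} W")
```

Because the only input is the workload's activity level, two consoles running the same scene pick the same clock regardless of where they are sitting, which is the determinism described above.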

While we're at it we also use AMD's "Smart Shift" Technology and send any unused power from the CPU to the GPU so it can squeeze out a few more pixels.

The benefits of this strategy are quite large.

Running a GPU at 2 GHz was looking like an unreachable target with the old fixed frequency strategy.

With this new paradigm we're able to run way over that. In fact we have to cap the GPU frequency at 2.23 GHz so that we can guarantee that the on chip logic operates properly.

36CUs at 2.23 GHz is 10.3 Teraflops and we expect the GPU to spend most of its time at or close to that frequency and performance.

Similarly running the CPU at 3 GHz was causing headaches with the old strategy. But now we can run it as high as 3.5 GHz.

In fact it spends most of its time at that frequency.

That doesn't mean all games will be running at 2.23 GHz and 3.5 GHz. When that worst case game arrives it will run at a lower clock speed, but not too much lower. To reduce power by 10% it only takes a couple of percent reduction in frequency.

So I'd expect any down clocking to be pretty minor.
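The relationship between power and frequency can be estimated with a common first-order model. The assumption here, which is not from the talk, is that dynamic power scales with frequency times voltage squared and that voltage scales roughly linearly with frequency, so power goes approximately as the cube of frequency.

```python
# First-order estimate of how much clock reduction a power cut requires.
# Assumption (not from the talk): dynamic power scales as f * V**2 and voltage
# scales roughly linearly with frequency, so power ~ f**3.

def frequency_scale_for_power(power_scale: float, exponent: float = 3.0) -> float:
    """Frequency multiplier that yields the given power multiplier."""
    return power_scale ** (1.0 / exponent)

power_scale = 0.90  # reduce power by 10%
f_scale = frequency_scale_for_power(power_scale)

print(f"Frequency reduction for a 10% power cut: {1.0 - f_scale:.1%}")
print(f"GPU: 2.23 GHz -> {2.23 * f_scale:.2f} GHz")
print(f"CPU: 3.50 GHz -> {3.50 * f_scale:.2f} GHz")
```

Under this simple model a 10% power reduction costs between 3% and 4% of clock speed, which is in line with the "couple of percent" described above.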

All things considered the change to a variable frequency approach will show significant gains for PlayStation gamers.

The final of our three principles was about finding new dreams.

It's important for us on the hardware team to find new ways to expand or deepen gaming and that's what led us to a focus on 3D Audio.

As players we experience the game through the visuals, through audio, and through the feedback we receive from the controller, such as Rumble or haptics.

Personally I feel a game is just dead without audio.

Visuals are of course important but the impact of audio is huge as well.

At the same time the audio team on a game project has to do a lot with a little.

For example, on PlayStation 4 there's fierce competition for the Jaguar CPU cores; audio typically ends up getting just a fraction of a core.

That's not much of a computational resource particularly when you consider that the visuals run at 30 or 60 frames a second but audio processing needs to happen at almost 200 times a second.
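The "almost 200 times a second" figure is consistent with a common audio configuration. As a hedged illustration, assuming 48 kHz output processed in blocks of 256 samples (neither number is stated in the talk):

```python
# Audio tick rate versus video frame rate.
# Assumptions (not stated in the talk): 48 kHz output, processed in blocks
# of 256 samples.

SAMPLE_RATE_HZ = 48_000
BLOCK_SIZE_SAMPLES = 256

ticks_per_second = SAMPLE_RATE_HZ / BLOCK_SIZE_SAMPLES
tick_budget_ms = 1000.0 / ticks_per_second

print(f"Audio ticks per second: {ticks_per_second:.1f}")
print(f"Time budget per tick:   {tick_budget_ms:.2f} ms")
for fps in (30, 60):
    print(f"Audio ticks per frame at {fps} fps: {ticks_per_second / fps:.2f}")
```

With these assumed values, each audio tick has only a few milliseconds to complete, and several ticks must finish within every rendered frame.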

So it's been tough going making forward progress on audio with PlayStation 4, particularly when PlayStation 3 was such a beast when it came to audio.

The SPUs in Cell were almost a perfect device for audio rendering.

Simple pipeline algorithms could really take advantage of asynchronous DMA and frequently reached a hundred percent utilization of the floating-point unit.

There's unfortunately nothing comparable on PlayStation 4.

Probably the most dramatic progress in the PlayStation 4 generation has been with Virtual Reality. The PSVR hardware has its own audio unit.

It supports about fifty pretty decent 3D sound sources and this provided a hint as to where we could go with audio as well as some valuable experience.

Not to oversimplify but here were our goals for audio on PlayStation 5.

The first goal was great audio for everyone.

Not just VR users or sound bar owners or headphone users.

That meant audio had to be part of the console it couldn't be a peripheral.

The second goal was to support hundreds of sound sources. We didn't want developers to have to pick and choose what sounds would get 3D effects and which wouldn't. We wanted every sound in the game to have dimensionality.

And finally we wanted to really take on the challenges of Presence and Locality.

Now when we say Presence we mean the feeling that you're actually there; you've entered the Matrix.

It's not of course something we thought we could perfectly achieve but the idea was that if we stopped using just a rain sound and instead use lots of 3D audio sources for raindrops hitting the ground at all sorts of locations around you then at some point your brain would take a leap and you'd begin to have this feeling.

This feeling of real Presence inside the virtual world of the game.

This has the capacity to affect your appreciation of the game just like music in a game does.

The concept of Locality is simpler; it's just your sense of where the audio is coming from: to the right of you, behind you, above you. This can immerse you further in the game and it can also directly enhance the gameplay.

To use "Dead Space" as an example (I know, old school), you're fighting enemies in fairly dark, spooky locations.

Back in the day if you played the game using the TV speakers you could tell that there was one last enemy growling and hunting you down but it was difficult to tell quite where that enemy was.

With headphones you could tell that the enemy was somewhere on the right, which let you deduce, if you couldn't see it, that it must be somewhere behind and to your right.

But with 3D Audio with good Locality the idea is you know the enemy is precisely there and you turn and you take it out.

So how do we know where a sound is coming from in the first place?

Well, all those bumps and folds in the ear have a meaning, evolutionarily speaking. Based on what direction the sound is coming from, sound waves bounce around inside the ear, there's some constructive and destructive interference, and the result is a change in volume.

The phase of the sound also shifts depending on what path the sound wave took to reach the ear canal.

These volume changes and phase shifts are different for each direction and also vary depending on the frequency of the sound.

Head size and head shape also impact the sound in a similar fashion.

The way that the sound changes based on direction and frequency can be encoded in a table called the Head Related Transfer Function or "HRTF".

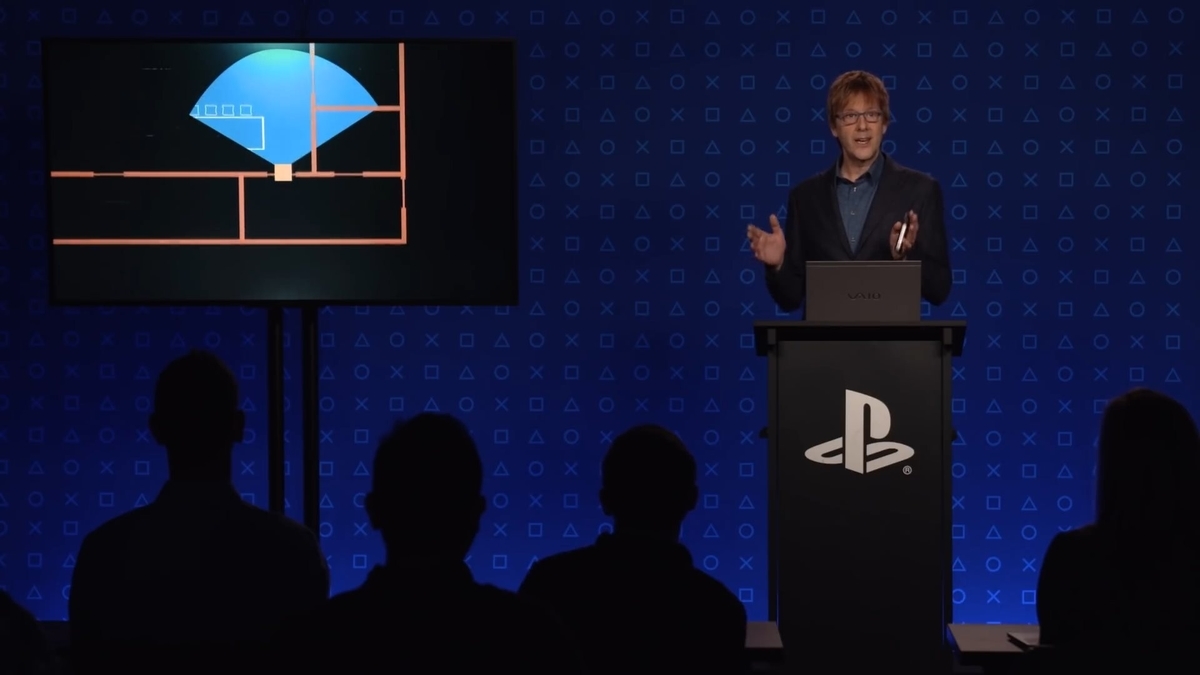

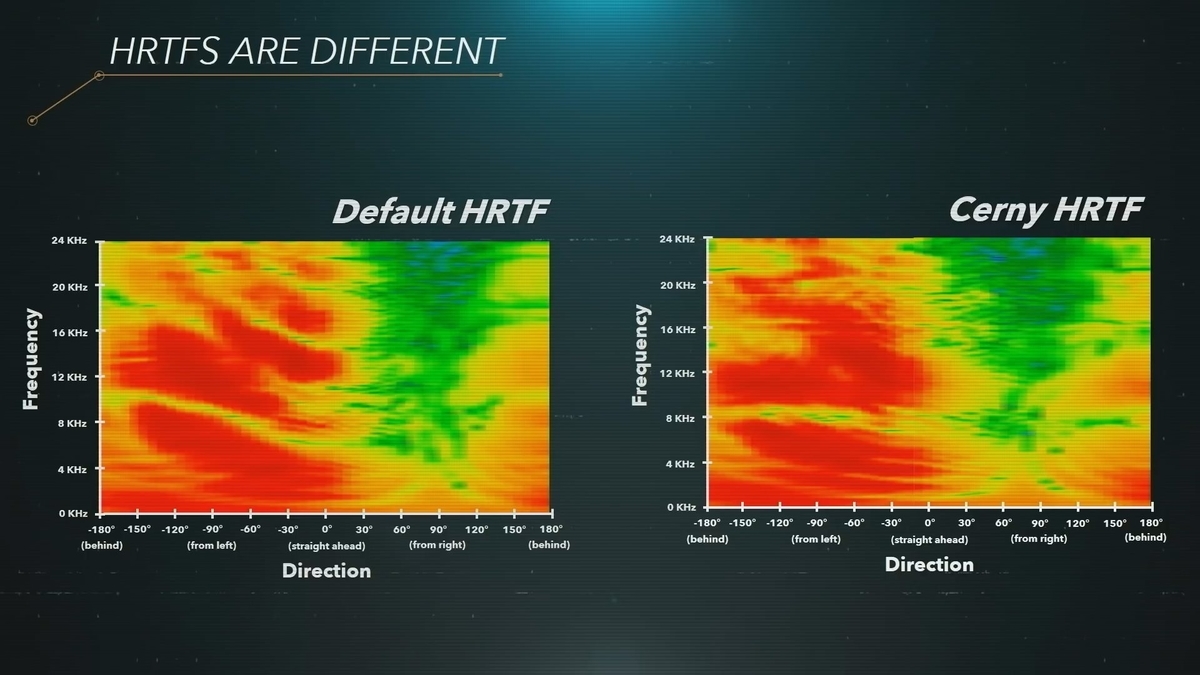

Here's part of one.

The vertical axis is the frequency, the horizontal axis is the direction (front, back, left, right), and the color gives the degree of attenuation of the sound at that frequency.

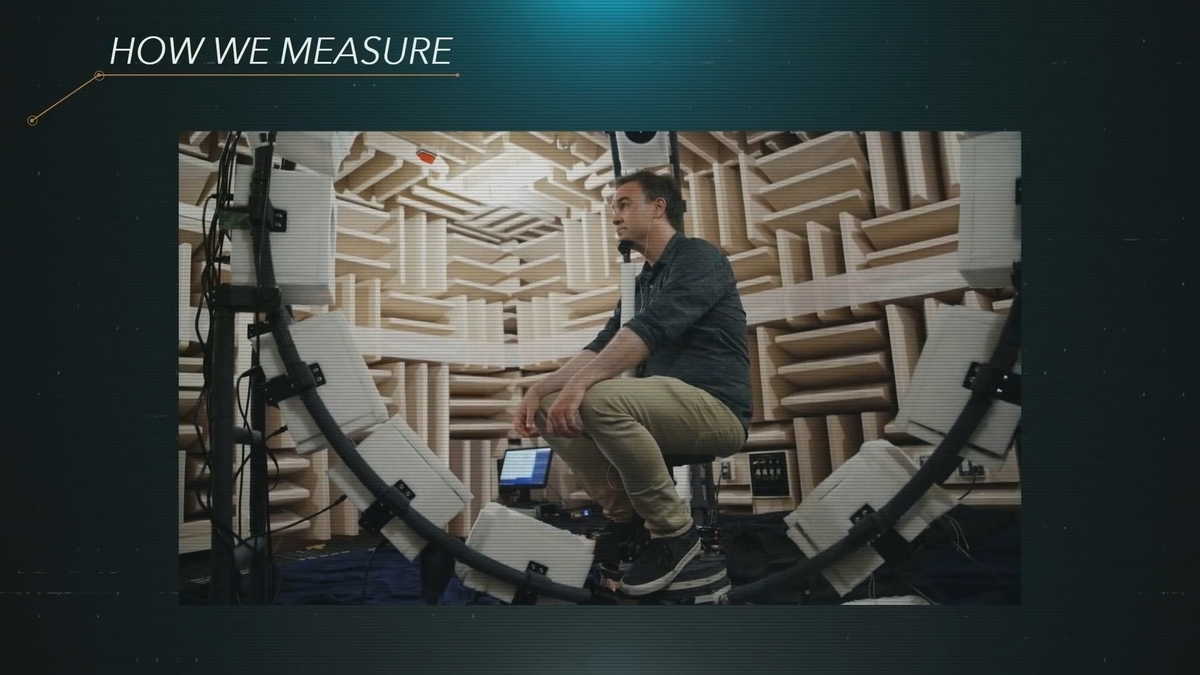

The HRTF is as unique to an individual as a fingerprint is; in fact you're looking at mine right now. Here is how we measure an HRTF; we've taken hundreds of people through this process.

We put a microphone in the subject's left and right ear canals and then sit the subject down in the middle of an array of 22 speakers.

We then play an audio sweep from each speaker as we rotate the subject.

In the course of ten or twenty minutes we're able to sample the HRTF at over 1,000 locations.

Using an HRTF when rendering audio creates unparalleled quality but it's computationally expensive.

The simplest way to use an HRTF is to process a sound source to make it appear as if it's coming from one of those thousand locations we sampled.

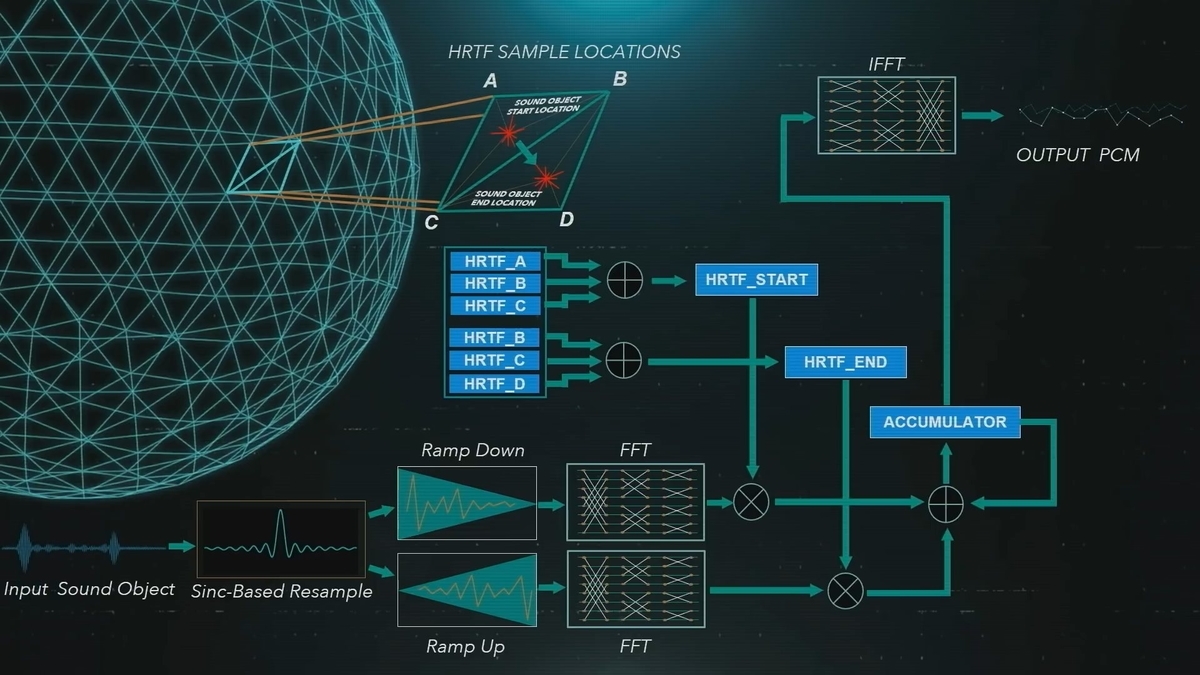

Unfortunately the processing has to be done in frequency domain rather than time domain, so there's multiple fast Fourier transforms needed for every sound source for every audio tick.

That's a lot of multiplies.
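To make the cost concrete, here is a minimal sketch of frequency-domain filtering for a single sound source: transform the source, multiply by each ear's response, and transform back. The impulse responses are toy values rather than measured HRTF data, the small recursive FFT stands in for an optimized one, and the function names are hypothetical. A real implementation would also process overlapping blocks every audio tick rather than a whole signal at once.

```python
# Minimal sketch of frequency-domain HRTF filtering for one sound source.
# The impulse responses below are toy values, not measured HRTF data.
import cmath

def fft(x, inverse=False):
    """Recursive radix-2 FFT; len(x) must be a power of two (unnormalized)."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2], inverse)
    odd = fft(x[1::2], inverse)
    sign = 1.0 if inverse else -1.0
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out

def fft_convolve(signal, impulse_response):
    """Linear convolution via FFT: transform, multiply per bin, inverse."""
    out_len = len(signal) + len(impulse_response) - 1
    n = 1
    while n < out_len:
        n *= 2
    a = fft([complex(v) for v in signal] + [0j] * (n - len(signal)))
    b = fft([complex(v) for v in impulse_response]
            + [0j] * (n - len(impulse_response)))
    product = [p * q for p, q in zip(a, b)]
    result = fft(product, inverse=True)
    return [v.real / n for v in result[:out_len]]

def render_binaural(mono_block, hrir_left, hrir_right):
    """Filter one mono block with the left-ear and right-ear responses."""
    return (fft_convolve(mono_block, hrir_left),
            fft_convolve(mono_block, hrir_right))

# Toy example: a source on the right arrives later and quieter at the left ear.
mono = [1.0, 0.5, 0.25, 0.0]
hrir_right = [0.9, 0.1]
hrir_left = [0.0, 0.0, 0.4, 0.2]
left, right = render_binaural(mono, hrir_left, hrir_right)
print("left :", [round(v, 4) for v in left])
print("right:", [round(v, 4) for v in right])
```

Even in this toy form, each source needs several transforms per ear, and that work repeats for every source on every tick.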

This computational complexity was the determining factor for our strategy. In the end we had to bite the bullet and design and build a custom hardware unit for 3D Audio.

Collectively we're referring to the hardware unit and the proprietary algorithms we run on it as "Tempest 3D Audio Tech."

The meaning of 3D Audio and Technology should be pretty obvious here. As for Tempest, I feel it really reflects our goals with audio: it suggests a certain intensity of experience and also hints at your presence within it.

We're calling the hardware unit that we built the Tempest Engine.

It's based on AMD's GPU technology. We modified a compute unit in such a way as to make it very close to the SPUs in PlayStation 3.

Remember when I said that they were ideal for audio? So the Tempest Engine has no caches, just like an SPU.

All data access is via DMA just like an SPU.

Our target was that it would have more power than a CPU thanks to the parallelism that a GPU can achieve, and that it would be more efficient than our GPU thanks to the SPU-like architecture.

The goal being to make possible near 100% utilization of the CU's vector units.

Where we ended up is a unit with roughly the same SIMD power and bandwidth as all 8 Jaguar cores in the PlayStation 4 combined.

If we were to use the same algorithms as PSVR that's enough for something like 5,000 sound sources.

But of course we want to use more complex algorithms and we don't need anything like that number of sounds.

It would have been wonderful if a simpler strategy such as using Dolby Atmos peripherals could have achieved our goals but we wanted 3D Audio for all not just those with licensed sound bars or the like.

Also we wanted many hundreds of sound sources, not just the 32 that Atmos supports. And finally we wanted to be able to throw an overwhelming amount of processing power at the problem, and it wasn't clear what any peripheral might have inside of it.

In fact with the Tempest Engine we've even got enough power that we can allocate some to the games to the extent that games want to make use of Convolution Reverb and other algorithms that are either computationally expensive or need high bandwidth.

But the primary purpose of the Tempest Engine remains 3D Audio.

Now 3D Audio is a major academic research topic; it's safe to say that no one in the world has all of the answers.

And the set of algorithms that has to be invented tuned or implemented to realize our vision for 3D Audio is immense.

For example, use of HRTFs in games is quite complex. Before, I talked about making a sound source appear as if it's coming from one of those thousand HRTF sample locations.

But for high quality 3D game audio we have to handle other possibilities.

The sound source might not be at one of the thousand HRTF sample locations so we have to blend the HRTF data from the closest locations that we have sampled.

The sound source might be moving which needs very special handling as that blend keeps changing and that can cause phase artifacts in the resulting audio.

Or the sound source might have a size to it meaning it should feel as if it's coming from an area rather than a single point.
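The first of those cases, blending HRTF data from the closest sampled locations, can be sketched as a simple interpolation. This is a hypothetical simplification: real HRTFs are sampled over a sphere and carry phase as well as magnitude, whereas the table below uses azimuth only, three frequency bands, and made-up attenuation values.

```python
# Hypothetical sketch: blending HRTF data from the two nearest sampled
# directions. Azimuth only, per-band gains in dB, made-up numbers.
import bisect

# azimuth in degrees -> attenuation (dB) for three frequency bands
SAMPLED_HRTF = {
    0.0: [0.0, -1.0, -3.0],
    30.0: [-0.5, -2.0, -6.0],
    60.0: [-1.0, -4.0, -9.0],
}
AZIMUTHS = sorted(SAMPLED_HRTF)

def blended_gains(azimuth_deg: float) -> list:
    """Linearly interpolate band gains between the two nearest samples."""
    if azimuth_deg <= AZIMUTHS[0]:
        return list(SAMPLED_HRTF[AZIMUTHS[0]])
    if azimuth_deg >= AZIMUTHS[-1]:
        return list(SAMPLED_HRTF[AZIMUTHS[-1]])
    hi_index = bisect.bisect_right(AZIMUTHS, azimuth_deg)
    lo_az, hi_az = AZIMUTHS[hi_index - 1], AZIMUTHS[hi_index]
    weight = (azimuth_deg - lo_az) / (hi_az - lo_az)
    lo, hi = SAMPLED_HRTF[lo_az], SAMPLED_HRTF[hi_az]
    return [(1.0 - weight) * a + weight * b for a, b in zip(lo, hi)]

print(blended_gains(45.0))
```

For a moving source the blend weight changes from tick to tick, which is where the special handling mentioned above comes in.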

There's also whole categories of approaches to be handled. 3D Audio can be implemented using individual processing of 3D sound sources, but alternatively "Ambisonics" can be used for 3D Audio.

Ambisonics is somewhat like the spherical harmonics used in computer graphics.
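The spherical-harmonics analogy is easiest to see in first-order Ambisonics, where a sound is encoded into one omnidirectional channel (order 0) and three directional channels (order 1). The sketch below uses the traditional B-format convention, in which W is scaled by 1/sqrt(2). Whether PlayStation 5 uses this order or convention is not stated in the talk.

```python
# First-order Ambisonic (B-format) encoding of a single sample.
# Traditional convention with W scaled by 1/sqrt(2); the order and convention
# actually used on PlayStation 5 are not stated in the talk.
import math

def encode_first_order(sample: float, azimuth_deg: float, elevation_deg: float):
    """Return (W, X, Y, Z) for a source at the given direction.

    Azimuth is measured counter-clockwise from straight ahead,
    elevation upward from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)               # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)  # front-back
    y = sample * math.sin(az) * math.cos(el)  # left-right
    z = sample * math.sin(el)                 # up-down
    return w, x, y, z

# A source directly to the left (azimuth 90 degrees) on the horizontal plane.
w, x, y, z = encode_first_order(1.0, azimuth_deg=90.0, elevation_deg=0.0)
print(f"W={w:.3f} X={x:.3f} Y={y:.3f} Z={z:.3f}")
```

Any number of sources can be summed into the same four channels, so the cost of decoding for the listener does not grow with the number of sounds.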

And finally there's audio devices the player might be using headphones or TV speakers or have a higher end surround sound set up with six or more speakers all of which need different approaches.

That's a lot of variations. It's nice to have the computational resources of the Tempest Engine but it's clear that achieving our ultimate goals with 3D Audio is going to be a multi-year step-by-step process.

Having said that headphone audio implementation is largely complete at this time.

It was a natural place for us to start with headphones we control exactly what each ear hears and therefore the algorithmic development and implementation are more straightforward.

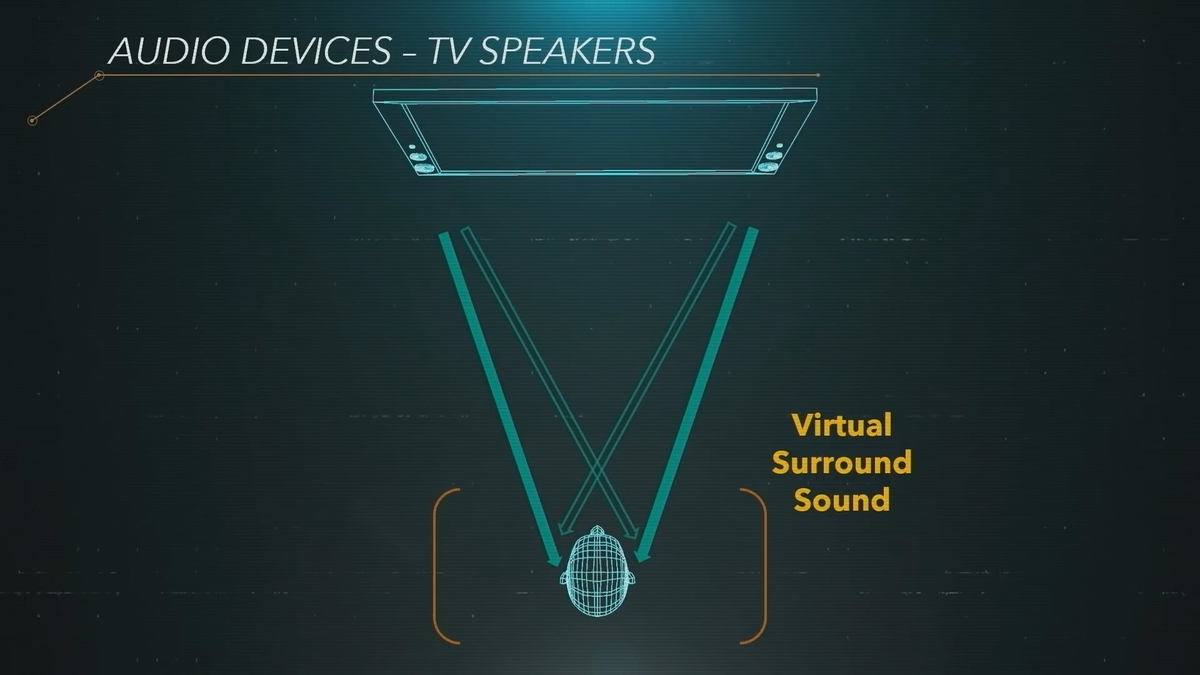

For TV speakers and stereo speakers we're in the process of implementing virtual surround sound.

The idea being that if you're sitting in a sweet spot in front of the TV then the sound can be made to feel as if it's coming from any direction even behind you.

Virtual surround sound has a lot in common with 3D Audio on headphones, but it's more complex because the left ear can hear the right speaker and vice-versa. We have a basic implementation of virtual surround up and running. We're now looking at increasing the size of that sweet spot, which is to say making the area you need to be in to feel the 3D effect larger, and we're also working to boost the sense of Locality.
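The talk does not say how the left-ear-hears-right-speaker problem is handled. One standard technique for it is crosstalk cancellation, sketched below at a single frequency: treat the four speaker-to-ear paths as a 2x2 matrix and invert it to find the speaker signals that produce the desired signal at each ear. The transfer values and the function name are made up for illustration.

```python
# Hypothetical sketch of crosstalk cancellation at a single frequency.
# The four speaker-to-ear transfer values are made-up complex gains.

def speaker_signals(ear_left, ear_right, h_ll, h_lr, h_rl, h_rr):
    """Solve for speaker outputs that produce the desired signals at each ear.

    h_xy is the transfer from speaker y to ear x, so:
        ear_left  = h_ll * spk_left + h_lr * spk_right
        ear_right = h_rl * spk_left + h_rr * spk_right
    """
    det = h_ll * h_rr - h_lr * h_rl
    if abs(det) < 1e-12:
        raise ValueError("transfer matrix is singular at this frequency")
    spk_left = (h_rr * ear_left - h_lr * ear_right) / det
    spk_right = (h_ll * ear_right - h_rl * ear_left) / det
    return spk_left, spk_right

# Direct paths at full gain; crosstalk paths quieter and phase-shifted.
h_ll = h_rr = 1.0 + 0.0j
h_lr = h_rl = 0.3 - 0.2j

# Goal: the left ear hears the signal, the right ear hears nothing.
spk_l, spk_r = speaker_signals(1.0 + 0j, 0.0 + 0j, h_ll, h_lr, h_rl, h_rr)

# Verify by pushing the speaker signals back through the acoustic paths.
at_left = h_ll * spk_l + h_lr * spk_r
at_right = h_rl * spk_l + h_rr * spk_r
print(f"left ear: {abs(at_left):.3f}, right ear: {abs(at_right):.3f}")
```

The transfer values depend on where the listener sits relative to the speakers, which is one way to understand why the effect is tied to a sweet spot.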

Headphone audio is the current gold standard for 3D Audio on PlayStation 5 but we're going to see what we can do to bring virtual surround sound to a similar level after which we'll start in on setups with more speakers such as 6 channel surround sound.

It's now to the point where some of the PlayStation 5 games in development are extensively using these systems. One of the game demos allows you to toggle between conventional PlayStation 4 style stereo audio and our new 3D Audio.

I listened with just an ordinary pair of over the ear headphones and wow I could feel a difference.

3D Audio has that dimensional feel to it; conventional stereo audio feels smashed flat by comparison. The improvement is obvious.

So a big advancement, but have I entered the Matrix? Does my brain believe I'm really there, like I was talking about earlier when I explained our target of Presence?

Well the answer is no but you've probably caught on to what's missing here namely whose HRTF was being used.

It wasn't mine. It was the default HRTF.

The audio team analyzed the hundreds that they measured and chose the one they felt was the closest fit to the total game playing audience.

This shows a piece of the default HRTF on the left and my HRTF on the right. You can see that the general features are much the same but the details are quite different.

With the default HRTF, as I said, the 3D Audio sounds pretty great. When I use my HRTF, though, the audio reaches a higher level of realism.

Which is to say that when using headphones and my HRTF I occasionally get fooled and even think a sound is coming from the real world when it's actually coming from the game.

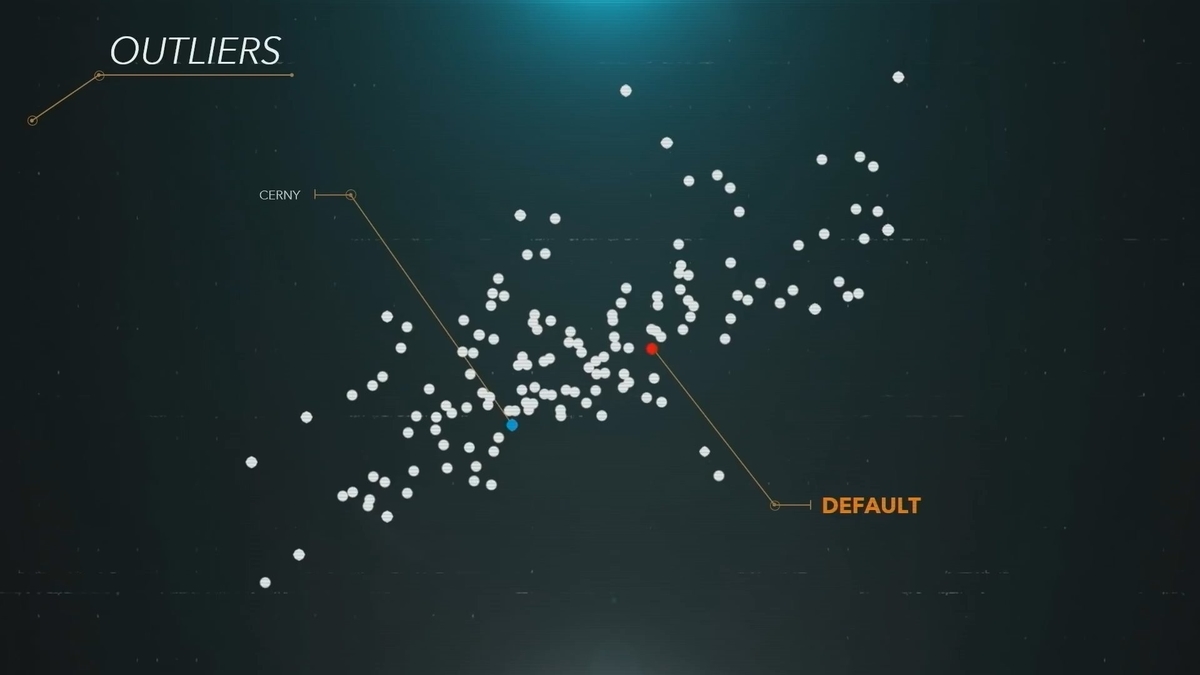

A corollary to this is that there are a few people whose HRTFs are sufficiently far from the default HRTF (that's the red dot here) that they can toggle between PS4 style and PS5 style audio and not sense much difference.

I've had a few people describe the PlayStation 5 3D Audio as sounding like a bit better stereo audio; presumably they're the ones at the very edges of this diagram.

Which means what HRTF you're using is key.

I'd like everyone to be able to experience what I'm experiencing but obviously it's not possible to measure the HRTF of every PlayStation user.

That means HRTF selection and synthesis are going to be big topics going forward as the Tempest Technology matures. At PlayStation 5 launch we'll be offering a choice of five HRTFs; there's a simple test where you pick the one that gives you the best locality.

That's just the first step though this is an open-ended research topic.

Maybe you'll be sending us a photo of your ear and we'll use a neural network to pick the closest HRTF in our library.

Maybe you'll be sending us a video of your ears and your head and we'll make a 3D model of them and synthesize the HRTF.

Maybe you'll play an audio game to tune your HRTF. We'll be subtly changing it as you play and home in on the HRTF that gives you the highest score, meaning that it matches you the best.

This is a journey we'll all be taking together over the next few years. Ultimately we're committing to enabling everyone to experience that next level of realism.

Hopefully I've been able to illustrate a bit about our design and decision making process today and why PlayStation 5 has the feature set that it does.

Now comes the fun part we get to see how the development community takes advantage of that feature set.

I'm hoping for the completely unexpected. Will it come from audio, Ray-Tracing, the capabilities of the SSD, or something else? I guess we'll find out soon enough.

Thank you for your time today.
